Amazon Price Scraping with Google Sheets

Amazon’s massive product ecosystem makes it a goldmine for price tracking, competitive analysis, and market research. This guide covers methods for Amazon price scraping, from small-scale tracking to enterprise-grade solutions, plus how to import data into Google Sheets for real-time analysis. Whether you’re hunting deals or analyzing eCommerce trends, we’ve got you covered.

Applications of Amazon price scraping in Google Sheets

Amazon prices are constantly changing, and keeping up can be a challenge. But staying on top of these shifts can give you a real advantage. With automated price scraping, you can:

- Track price changes over time – monitor price fluctuations to know the best time to buy or adjust your pricing strategy.

- Compare prices across different products – quickly spot which items offer the best value to make informed purchasing decisions.

- Set up price drop alerts – automate alerts to catch discounts as soon as they occur. You won't miss out on deals anymore.

- Analyze pricing trends – identify patterns in price movements and use the insights to optimize your business or personal shopping strategy.

- Keep an eye on competitors – stay ahead by tracking competitor pricing and adjusting your own prices accordingly.

Next, let’s see how to automate this process and have the data updated directly in Google Sheets.

Step-by-step guide to scraping Amazon prices into Google Sheets

Let’s discuss the methods to automate the extraction of product pricing data from the Amazon website to Google Sheets.

Tools and prerequisites

This guide is beginner-friendly, so no advanced coding experience is needed. However, having some familiarity with the following can be helpful:

- Basic knowledge of Google Sheets.

- JavaScript or Python (optional but helpful).

- Understanding of Chrome browser developer tools.

Now, let's explore three data scraping methods, starting with the first.

Method #1: using ImportXML

The IMPORTXML function in Google Sheets allows you to extract data from web pages using XPath queries. This no-code solution is perfect for beginners.

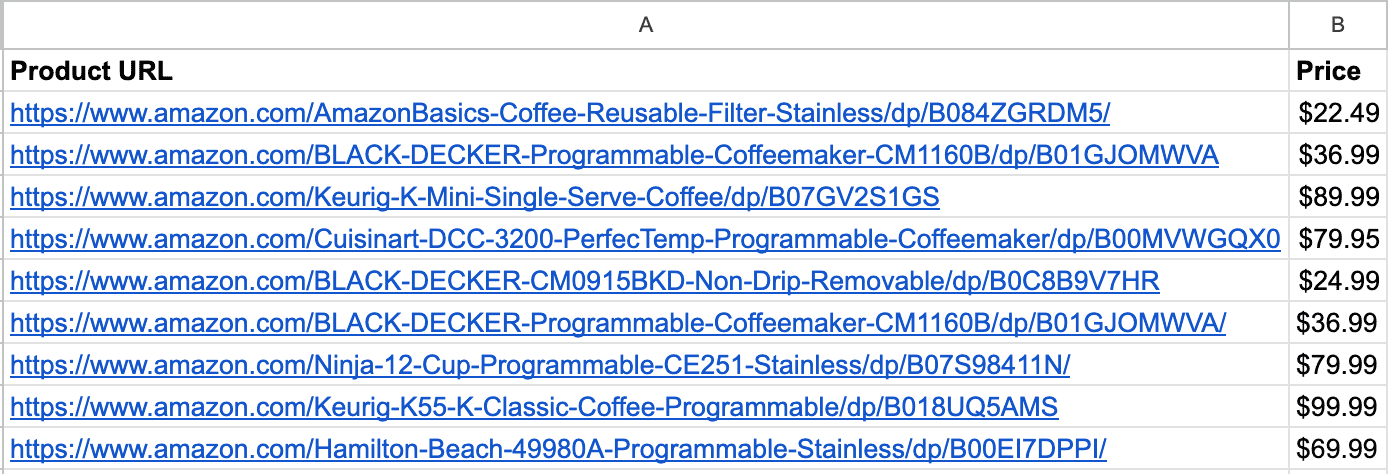

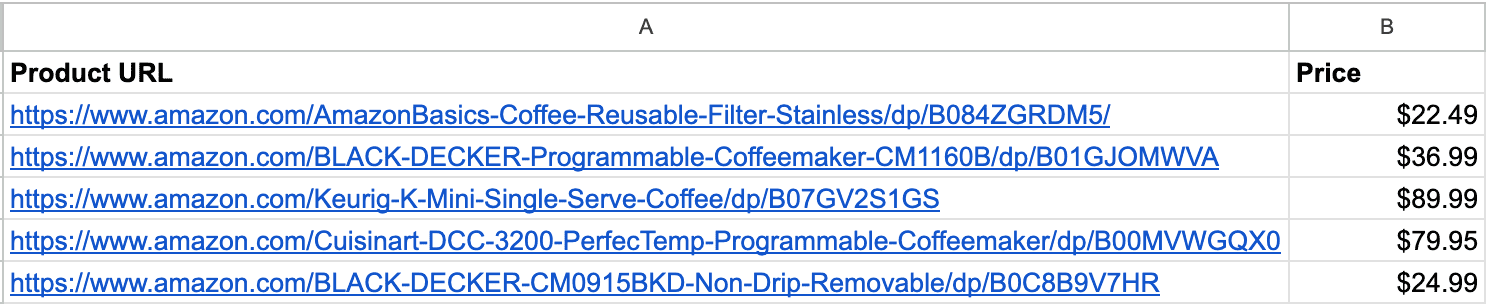

1. Open Google Sheets

Start a new sheet and create two columns:

- Column A – Product URL

- Column B – Price

Enter Amazon product URLs in column A (e.g., cell A2).

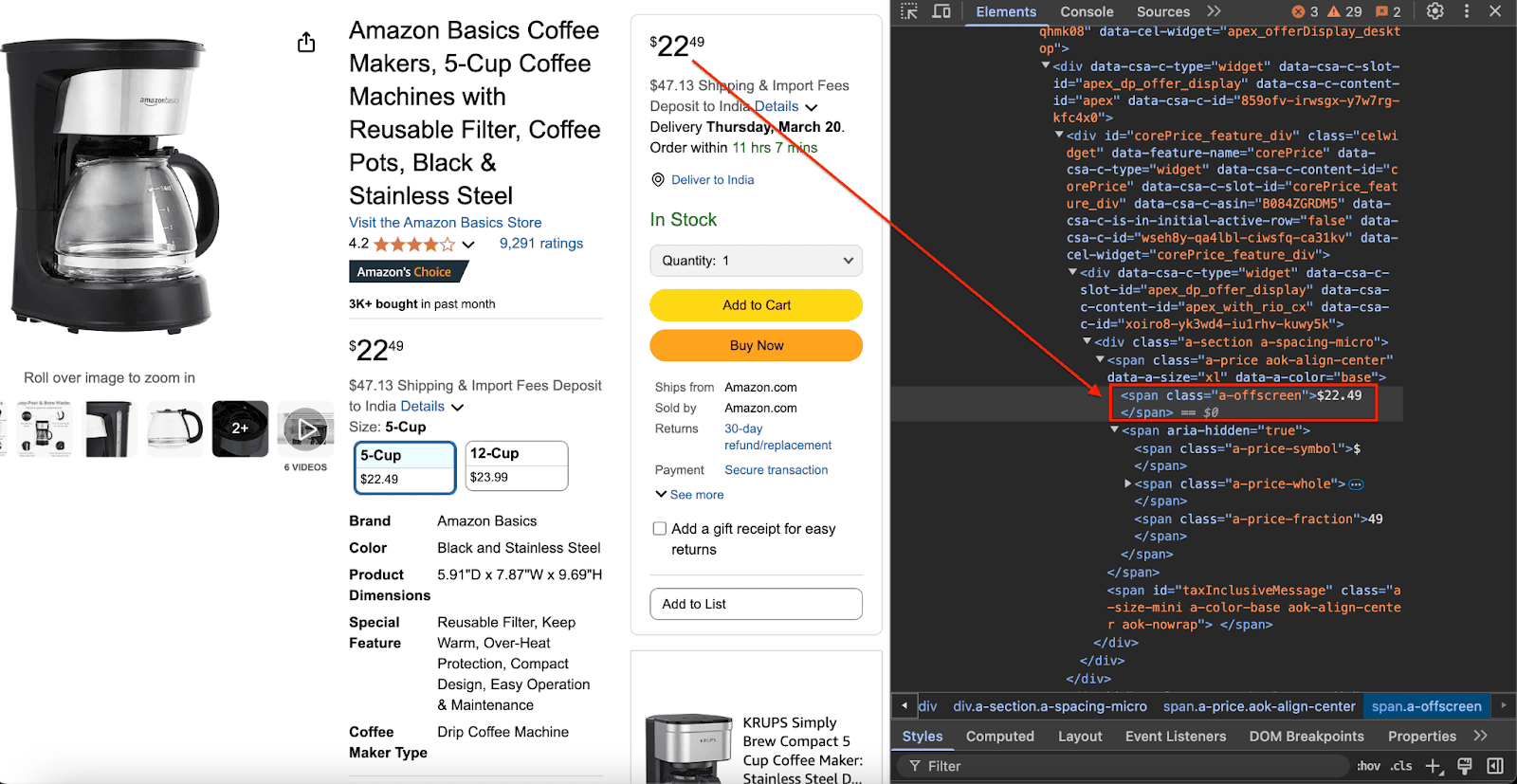

2. Identify the XPath of the price element

- Open an Amazon product page.

- Right-click the price and select Inspect to open the browser’s Developer Tools.

- Find the HTML element containing the price. It's typically stored in a <span> element with the class a-offscreen.

The XPath will be: //span[@class='a-offscreen'].

3. Use the IMPORTXML formula

In cell B2 (the Price column), enter the following formula to extract the first matching price:

=INDEX(IMPORTXML(A2, "//span[@class='a-offscreen']"), 1)

- The IMPORTXML function extracts all elements matching the XPath.

- The INDEX function ensures only the first value is returned.

If successful, B2 will display the product price.

4. Scrape multiple products

Add more URLs in column A and drag the formula down to column B. Each row will display the price for its corresponding product.

Note – if a cell shows #N/A, it may indicate that Amazon’s anti-scraping measures were triggered or the price element didn't appear properly.
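
To see what the formula selects, here is a minimal Python sketch that mirrors the XPath //span[@class='a-offscreen'] and the INDEX(..., 1) step on a made-up HTML fragment. The sample markup and the OffscreenPriceCollector class name are illustrative assumptions; real Amazon pages are far larger and their structure changes over time.

```python
from html.parser import HTMLParser

# Hypothetical fragment; real product pages contain many such spans.
SAMPLE_HTML = """
<div id="price-block">
  <span class="a-price"><span class="a-offscreen">$22.49</span></span>
  <span class="a-price a-text-price"><span class="a-offscreen">$29.99</span></span>
</div>
"""


class OffscreenPriceCollector(HTMLParser):
    """Collects the text of every <span> whose class attribute is exactly 'a-offscreen'."""

    def __init__(self):
        super().__init__()
        self.matches = []
        self._capturing = False

    def handle_starttag(self, tag, attrs):
        # @class='a-offscreen' in XPath is an exact match on the attribute value.
        if tag == "span" and dict(attrs).get("class") == "a-offscreen":
            self._capturing = True
            self.matches.append("")

    def handle_data(self, data):
        if self._capturing:
            self.matches[-1] += data

    def handle_endtag(self, tag):
        if tag == "span" and self._capturing:
            self._capturing = False


parser = OffscreenPriceCollector()
parser.feed(SAMPLE_HTML)
all_prices = [m.strip() for m in parser.matches]     # every match, like IMPORTXML
first_price = all_prices[0] if all_prices else None  # first match only, like INDEX(..., 1)
print(all_prices, first_price)
```

Because the match is on the exact class value, the outer a-price spans are skipped and only the a-offscreen spans contribute values.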

Learn more about how to scrape data from a website into Google Sheets.

Method #2: using Google Apps Script

For extra control and flexibility, Google Apps Script is a great option.

Step 1. Create a new Google Sheet

- Column A – Product URLs

- Column B – Price data (initially empty)

Step 2. Open the script editor

- Click Extensions and then Apps Script in the menu.

- Delete any default code.

Step 3. Add the following script

Paste this script into the editor:

    function scrapeAmazonPrices() {
      var sheet = SpreadsheetApp.getActiveSpreadsheet().getActiveSheet();
      var lastRow = sheet.getLastRow();
      var urls = sheet.getRange("A2:A" + lastRow).getValues();

      for (var i = 0; i < urls.length; i++) {
        var url = urls[i][0];
        if (!url) continue;

        try {
          var response = UrlFetchApp.fetch(url, {
            muteHttpExceptions: true,
            headers: {
              // These headers help mimic a real browser request to avoid blocking
              'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/133.0.0.0 Safari/537.36',
              'Accept-Language': 'en-US,en;q=0.9', // Specifies preferred language
              'Accept': 'text/html,application/xhtml+xml,application/xml', // Acceptable content types
              'Referer': 'https://www.google.com/' // Makes it appear as if you came from Google
            }
          });
          var content = response.getContentText();
          var priceMatch = content.match(/<span class="a-offscreen">([^<]+)<\/span>/);
          var price = priceMatch ? priceMatch[1].trim() : "Price not found";
          sheet.getRange(i + 2, 2).setValue(price); // Writes price to column B
          Utilities.sleep(1000); // Adds delay between requests to avoid rate limiting
        } catch (e) {
          sheet.getRange(i + 2, 2).setValue("Error");
        }
      }
    }

    function onOpen() {
      SpreadsheetApp.getUi()
        .createMenu('Amazon')
        .addItem('Get Prices', 'scrapeAmazonPrices')
        .addToUi();
    }

The code does the following:

- Fetches product URLs from Column A and sends requests to Amazon.

- Extracts prices using a regular expression and updates Column B.

- The User-Agent and other headers mimic a real browser to reduce blocking.
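
The extraction step can also be tried outside Apps Script. The sketch below applies the same regular expression in Python to a hypothetical HTML fragment, then converts the matched text to a number, which the script itself does not do. The helper names extract_price_text and to_number are illustrative, and the number parsing assumes US-style formatting such as $1,299.00.

```python
import re
from typing import Optional

# Same pattern as the Apps Script version: the first <span class="a-offscreen"> wins.
PRICE_PATTERN = re.compile(r'<span class="a-offscreen">([^<]+)</span>')


def extract_price_text(html: str) -> Optional[str]:
    """Return the first matched price string, or None if the span is absent."""
    match = PRICE_PATTERN.search(html)
    return match.group(1).strip() if match else None


def to_number(price_text: str) -> float:
    """Convert a US-formatted price like '$1,299.00' to a float.

    Assumption: '.' is the decimal mark and ',' is a thousands separator.
    """
    cleaned = re.sub(r"[^0-9.]", "", price_text)
    return float(cleaned)


sample = '<span class="a-price"><span class="a-offscreen"> $1,299.00 </span></span>'
text = extract_price_text(sample)
print(text, to_number(text))

# A page without the price span (for example, a block page) yields None.
print(extract_price_text("<html>no price here</html>"))
```

Storing prices as numbers rather than text makes it easier to sort, chart, and compare them in a sheet.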

Step 4. Run the script

Save the script and click Run to execute it. The first run prompts you to authorize the script. Once it finishes, the prices will appear in column B.

Step 5. Schedule regular updates (optional)

Open Triggers in the Apps Script editor and add a time-driven trigger for scrapeAmazonPrices to run the script at regular intervals for continuous data updates.

Note – if a cell shows Price not found, it may indicate that Amazon’s anti-scraping measures were triggered or the price element didn't appear properly.

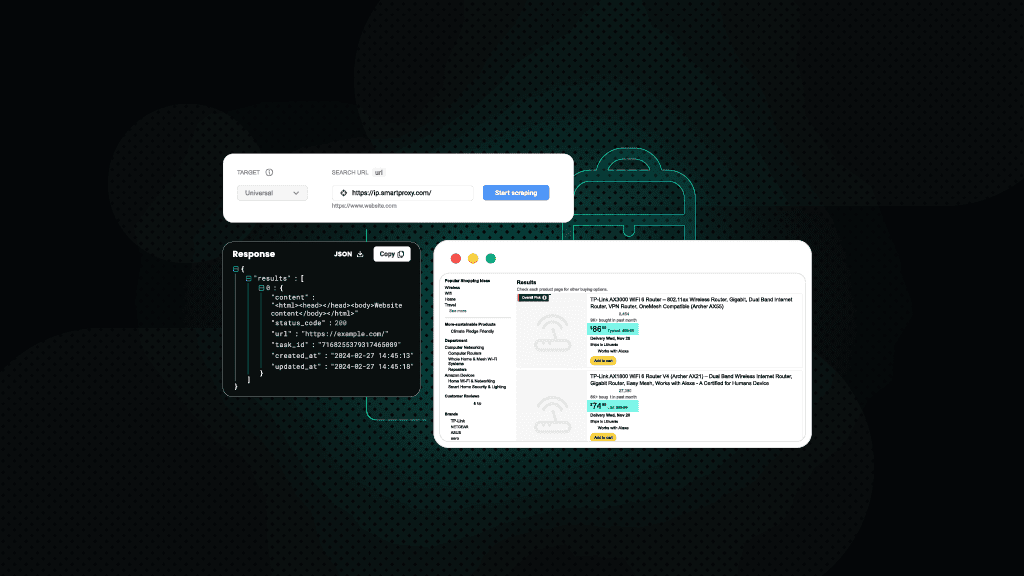

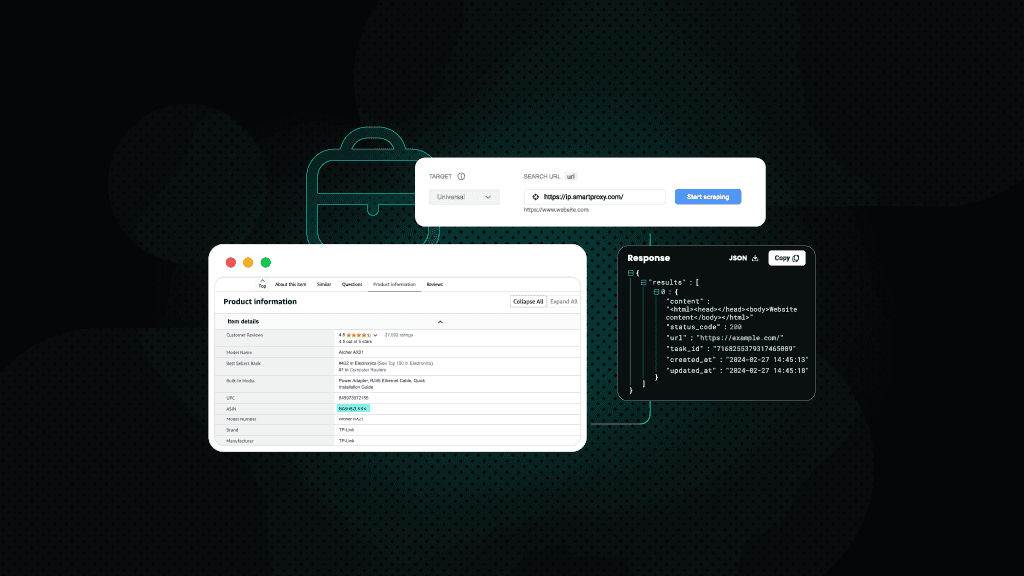

Method #3: using APIs or third-party tools

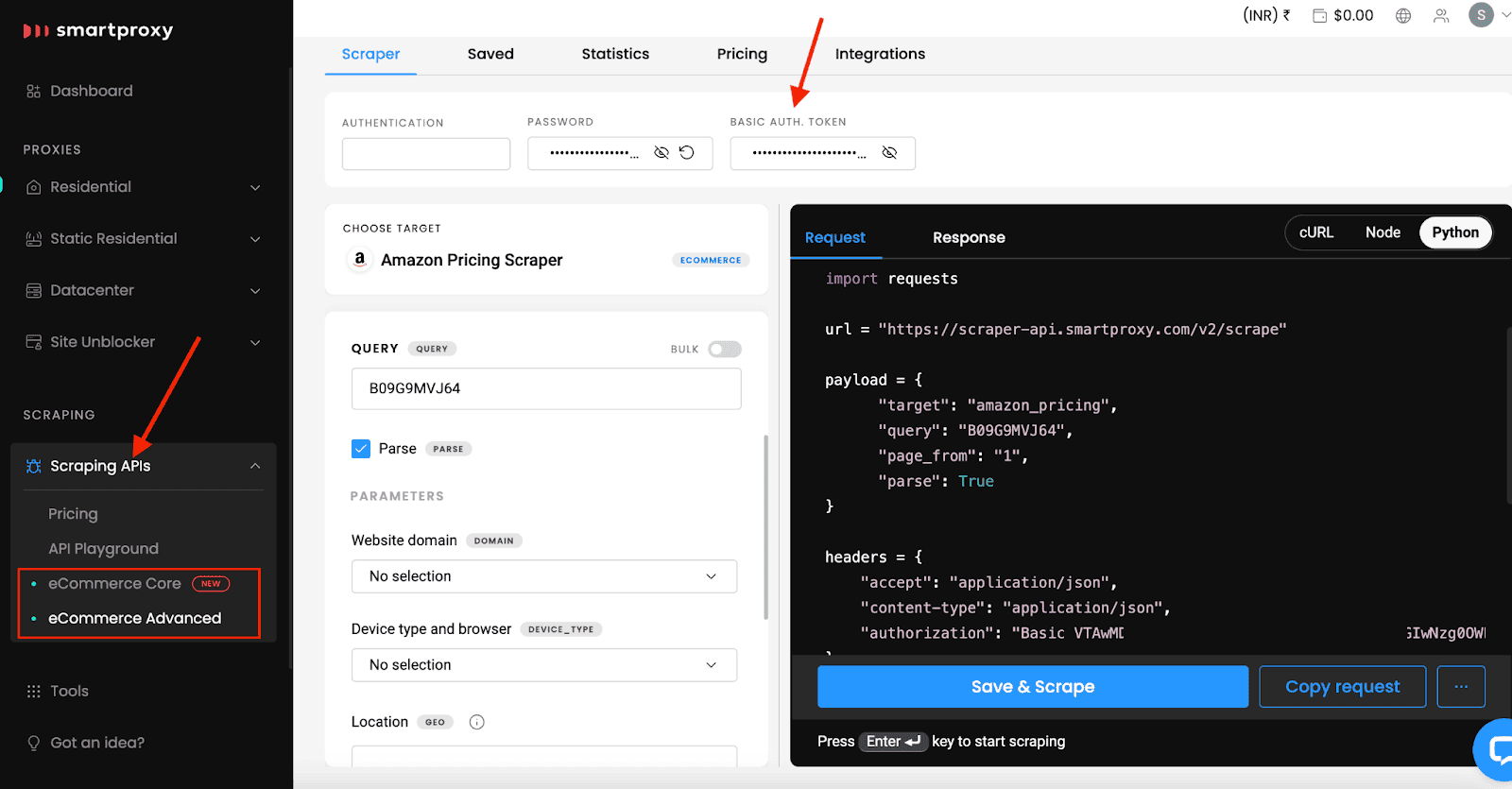

For advanced users who need a scalable, anti-bot solution beyond Google Sheets, Smartproxy's eCommerce Scraping API is a powerful option. This API allows you to extract Amazon pricing data in seconds while bypassing Amazon's anti-bot measures.

To try it out:

- Sign up for a Smartproxy account.

- Navigate to the Scraping APIs section in the dashboard.

- Go to the Pricing section, select the eCommerce tab, and choose a subscription plan. You can also activate your 7-day free trial with 1K requests!

- After subscribing, locate your Auth Token in the eCommerce Core or eCommerce Advanced tab, depending on your plan.

Here's a simple Python implementation:

    import requests

    url = "https://scraper-api.smartproxy.com/v2/scrape"

    payload = {
        "target": "amazon_pricing",
        "query": "B084ZGRDM5",
        "domain": "com",
        "page_from": "1",
        "parse": True,
    }

    headers = {
        "accept": "application/json",
        "content-type": "application/json",
        "authorization": "Basic YOUR_AUTH_TOKEN",
    }

    response = requests.post(url, json=payload, headers=headers)
    print(response.text)

Let’s break down the parameters:

- target – Tells the API that we want Amazon price data.

- query – This is the ASIN (Amazon Standard Identification Number). Replace this with the ASIN of the product you want to scrape.

- domain – Specifies the Amazon region (e.g., "com" for the US).

- page_from – Specifies the results page to start scraping from.

- parse – Enables automatic parsing, meaning the API will return structured data instead of raw HTML.

Important: Remember to replace YOUR_AUTH_TOKEN with your actual token from the dashboard.

The API returns structured JSON data:

    {
      "results": [
        {
          "content": {
            "results": {
              "url": "https://www.amazon.com/gp/aod/ajax/ref=dp_aod_unknown_mbc?asin=B084ZGRDM5&pageno=1",
              "asin": "B084ZGRDM5",
              "title": "Amazon Basics Coffee Makers, 5-Cup Coffee Machines with Reusable Filter, Coffee Pots, Black & Stainless Steel",
              "pricing": [
                {
                  "price": 22.49,
                  "seller": "Amazon.com",
                  "currency": "USD",
                  "condition": "New"
                  /* Additional options omitted */
                },
                {
                  "price": 17.77,
                  "seller": "Amazon Resale",
                  "currency": "USD",
                  "condition": "Used - Acceptable"
                }
                /* ... */
              ],
              "review_count": 9291
            }
            /* Response metadata omitted */
          }
        }
      ]
    }
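
To get this response into Google Sheets, one option is to flatten the pricing list into rows and save them as CSV, which Sheets can open via File > Import. The Python sketch below is an illustration under assumptions: it relies only on the fields visible in the sample response, and the helper names offers_to_rows and rows_to_csv are made up for this example.

```python
import csv
import io

# Trimmed copy of the sample response; with a live call you would use response.json().
sample_response = {
    "results": [{
        "content": {
            "results": {
                "asin": "B084ZGRDM5",
                "pricing": [
                    {"price": 22.49, "seller": "Amazon.com",
                     "currency": "USD", "condition": "New"},
                    {"price": 17.77, "seller": "Amazon Resale",
                     "currency": "USD", "condition": "Used - Acceptable"},
                ],
            }
        }
    }]
}


def offers_to_rows(response: dict) -> list:
    """Flatten every offer into [asin, seller, condition, price, currency]."""
    rows = []
    for result in response.get("results", []):
        product = result.get("content", {}).get("results", {})
        for offer in product.get("pricing", []):
            rows.append([
                product.get("asin"),
                offer.get("seller"),
                offer.get("condition"),
                offer.get("price"),
                offer.get("currency"),
            ])
    return rows


def rows_to_csv(rows: list) -> str:
    """Serialize rows to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["asin", "seller", "condition", "price", "currency"])
    writer.writerows(rows)
    return buffer.getvalue()


rows = offers_to_rows(sample_response)
cheapest = min(rows, key=lambda row: row[3])  # offer with the lowest price
print(rows_to_csv(rows))
print("Lowest offer:", cheapest[1], cheapest[3])
```

The .get() calls with defaults keep the helper from raising if a response lacks the pricing list, in which case it simply returns no rows.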

If you prefer a different programming language, head to the dashboard and customize the parameters there. Smartproxy automatically generates the code in various languages, including Node.js, cURL, and Python.

Want to continue exploring the advanced settings of our Scraping APIs? Explore our dev-friendly documentation.

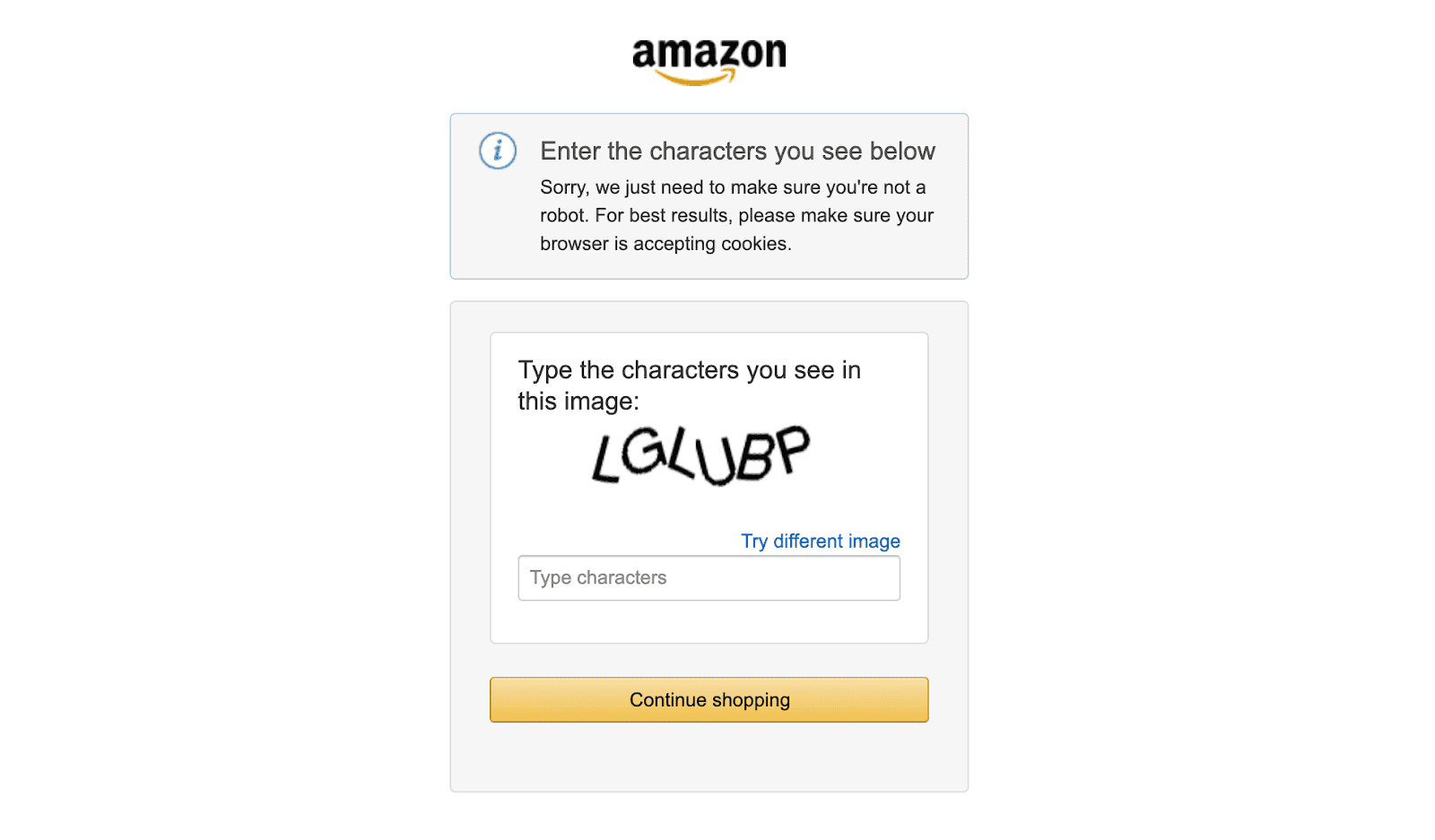

Dealing with challenges and limitations

Amazon employs several anti-scraping measures to protect its data, which can lead to challenges like blocked access or disrupted scraping.

While collecting the data from Amazon, you might face a few challenges:

- IP blocks – if you send too many requests too quickly, Amazon will flag your IP and block further access.

- CAPTCHAs – Amazon leverages CAPTCHAs to distinguish real users from bots. When a CAPTCHA appears, it halts your scraping process unless you have a way to bypass it.

- Rate limiting – the platform restricts how many requests you can make in a short time. Exceeding this limit can trigger temporary IP bans or CAPTCHA prompts.

- Frequent layout changes – Amazon updates its DOM structure, which can break your scraping scripts. XPath and CSS selectors need frequent updates to keep working.

The easiest way to avoid these headaches? Use solutions like the Smartproxy Amazon Scraper API. It handles bans, CAPTCHAs, and rate limits for you so you get clean, structured data without the hassle.

Best practices for web scraping Amazon

If you're scraping Amazon price data into Google Sheets, here are some key web scraping best practices to keep in mind:

- Rotate proxies – use a rotating proxy service to distribute requests across multiple IPs, reducing the likelihood of getting blocked.

- Modify user-agent headers – customize your user agent to make requests appear as if they're coming from a real browser, lowering detection risks.

- Throttle your requests – implement delays between requests to avoid scraping too quickly, which can trigger rate limiting or IP bans.

- Randomize request patterns – introduce variable delays and non-linear scraping sequences (clicks, mouse movements) to mimic human browsing behavior and avoid detection.

- Monitor HTML structure changes – regularly verify your XPath or CSS selectors as Amazon frequently updates its DOM structure, which can break your scraper.

- Implement error handling – build robust exception handling to manage failed requests, timeouts, and unexpected response formats to ensure continuous operation.
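
Throttling, randomized delays, and error handling can be combined in one small helper. The following Python sketch is one possible shape, not a prescribed implementation: fetch_with_retries, its parameters, and the delay values are assumptions chosen for illustration, and the fetch callable stands in for whatever HTTP client you use.

```python
import random
import time
from typing import Callable


def fetch_with_retries(
    fetch: Callable[[str], str],
    url: str,
    max_attempts: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call fetch(url), retrying on any exception with exponential backoff plus jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(url)
        except Exception:
            if attempt == max_attempts:
                raise  # give up after the final attempt
            # Base delay doubles each attempt; up to 50% random jitter is added on top.
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay / 2))
    raise ValueError("max_attempts must be at least 1")


# Demo with a fake fetcher that fails twice, then succeeds (no network needed).
calls = {"count": 0}


def flaky_fetch(url: str) -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise TimeoutError("simulated timeout")
    return "<html>ok</html>"


waits = []  # record the delays instead of actually sleeping
body = fetch_with_retries(flaky_fetch, "https://example.com/product", sleep=waits.append)
print(body, calls["count"], waits)
```

Passing sleep as a parameter keeps the demo instant; in real use, the default time.sleep applies the delays.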

Bottom line

Scraping Amazon prices into Google Sheets can be done in several ways. For example, you can use methods like IMPORTXML or Google Apps Script to extract the data you need. However, these methods aren’t ideal for large-scale scraping. Scraping Amazon prices at scale comes with challenges like CAPTCHAs, IP blocks, and frequent changes to the website’s structure.

If you don’t want to spend time fixing broken scripts or troubleshooting bans, Smartproxy’s Amazon Scraper API can save you the hassle. Just send a request and get structured data.


About the author

Lukas Mikelionis

Senior Account Manager

Lukas is a seasoned enterprise sales professional with extensive experience in the SaaS industry. Throughout his career, he has built strong relationships with Fortune 500 technology companies, developing a deep understanding of complex enterprise needs and strategic account management.

Connect with Lukas via LinkedIn.

All information on Smartproxy Blog is provided on an "as is" basis and for informational purposes only. We make no representation and disclaim all liability with respect to your use of any information contained on Smartproxy Blog or any third-party websites that may be linked therein.