Get Started With SERP Scraping API

Scrape data from major search engines with our full-stack tool. Use our guide to get started with SERP data collection now.

14-day money-back option

Any country, state, or city

100% success rate

Results in HTML or JSON

Headless scraping

Synchronous or asynchronous requests

Real-time integration

Proxy-like integration

Free 7-day trial

What is SERP Scraping API?

Our SERP Scraping API is designed for gathering data from search engines such as Google, Bing, Baidu, and Yandex. This tool combines 65M+ built-in proxies, an advanced web scraper, and a data parser, enabling you to retrieve real-time raw HTML or structured JSON data.

Most popular SERP Scraping API use cases

Gain insights into the latest and most relevant SERP data with our advanced scraper.

Research SEO

Extract paid and organic search results to analyze search volume, keyword competition, related terms, and the most popular topics.

Monitor brands

Track how you and your competitors are doing by collecting brand mentions, featured snippets, ranking positions, and more.

Generate leads

Discover your potential customers by efficiently gathering contact information straight from search result pages.

How to set up SERP Scraping API

Get started with your SERP Scraping API configuration by following our guide.

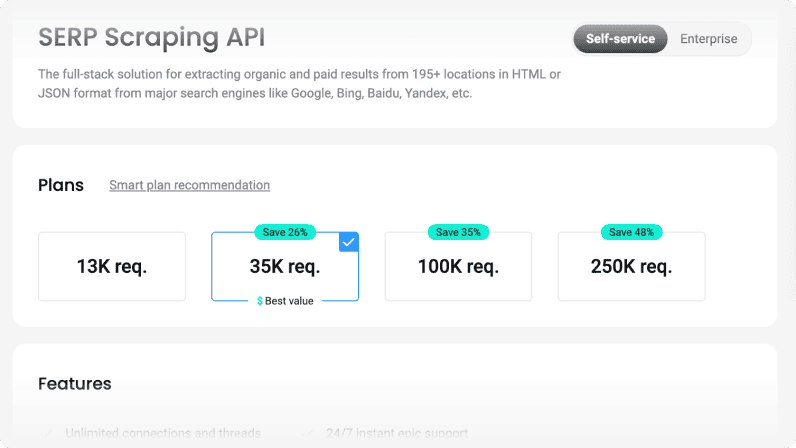

Choose a subscription

After creating your account, find the SERP section under the Scraping side menu.

Next, click the Pricing tab and select a subscription plan that suits your needs. Alternatively, start a 7-day free trial.

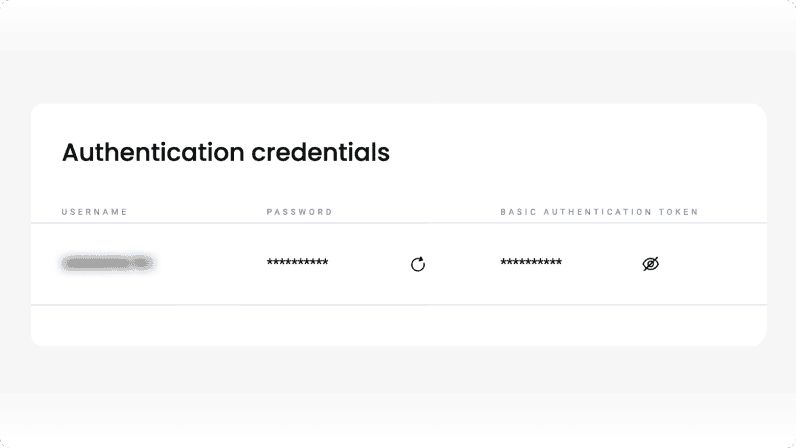

Authenticate with username:password

In the API Authentication tab, click on either the username or password to copy it. Use the buttons on the right to reveal your password or generate a new one.
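
The username:password pair is sent as an HTTP Basic authorization header, as the request example on this page suggests. Below is a minimal sketch of how that header value could be built in Python. The helper name `basic_auth_header` and the credentials are placeholders for illustration, not part of any official SDK.

```python
import base64


def basic_auth_header(username: str, password: str) -> dict:
    """Return an HTTP Basic authorization header for username:password."""
    # Basic auth is the Base64 encoding of "username:password".
    raw = f"{username}:{password}".encode("utf-8")
    token = base64.b64encode(raw).decode("ascii")
    return {"authorization": f"Basic {token}"}


# Placeholder credentials; replace them with the values copied
# from the API Authentication tab.
headers = basic_auth_header("YOUR_USERNAME", "YOUR_PASSWORD")
print(headers["authorization"])
```

The resulting string is what goes in place of a `Basic [YOUR_BASE64_ENCODED_CREDENTIALS]` value.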

Create a request command

Go to the Scrapers tab, where you can create a new project or use a ready-made scraper from the Popular Scrapers section.

When creating a new project, select a target, then enter the URL or query you wish to scrape. If applicable, choose the relevant parameters. You can find more parameter options in our help documentation.

On the right, you can copy the code in cURL, Node.js, or Python for use in your own environment.

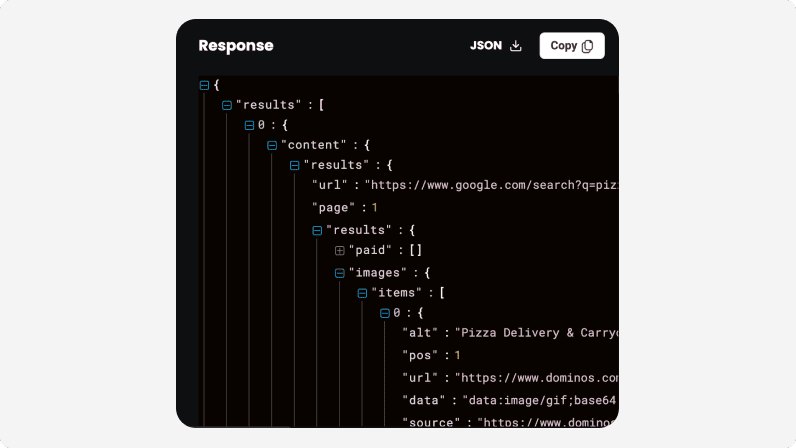

Alternatively, click Save and Scrape to send your request directly. Once the task is complete, the response will be displayed on the screen.

Copy or download the result in JSON or CSV

After sending a request from the Scrapers tab on the dashboard, you can copy or download the response in JSON or CSV.
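
If you copy the JSON and need CSV later in your own environment, the conversion can be done locally. The sketch below assumes the results have already been reduced to a flat list of records. The field names `pos`, `title`, and `url` are hypothetical sample data, and real responses may be nested differently.

```python
import csv
import io
import json


def results_to_csv(results: list[dict]) -> str:
    """Serialize a list of flat result dicts to CSV text."""
    if not results:
        return ""
    # Collect column names in first-seen order so rows with extra keys still fit.
    fieldnames = list(dict.fromkeys(key for row in results for key in row))
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(results)  # missing keys are written as empty cells
    return buffer.getvalue()


# Hypothetical sample records, not actual API output.
sample_json = (
    '[{"pos": 1, "title": "Example A", "url": "https://example.com/a"},'
    ' {"pos": 2, "title": "Example B", "url": "https://example.com/b"}]'
)
print(results_to_csv(json.loads(sample_json)))
```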

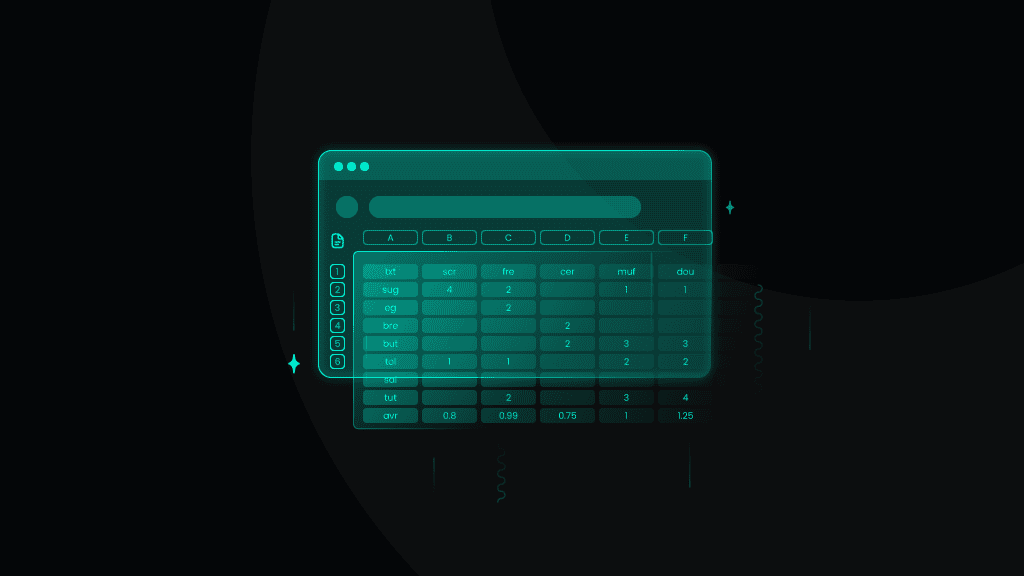

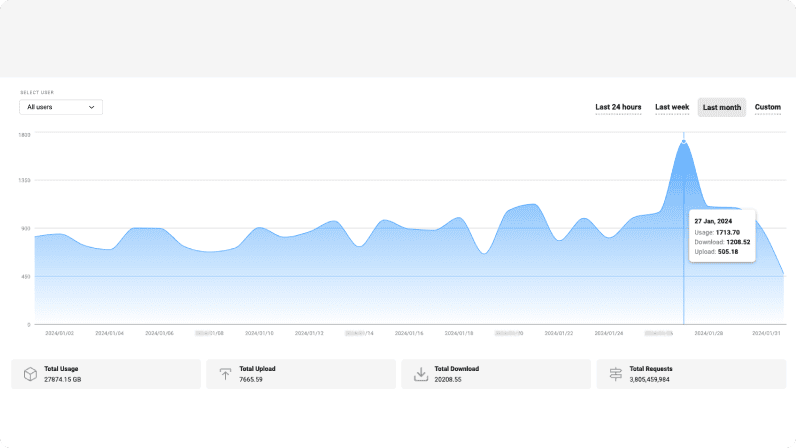

Track scraping usage

To track your scraping API usage, navigate to the Statistics tab. There, you’ll find traffic usage details over a selected timeframe.

Easy-to-integrate scraper

Our SERP Scraping API works with all popular programming languages, ensuring a smooth connection to other tools in your business suite.

    import requests

    url = "https://scraper-api.smartproxy.com/v2/scrape"

    # Request body: the target, the search query, and result options
    payload = {
        "target": "google_search",
        "query": "pizza",
        "page_from": "1",
        "num_pages": "10",
        "google_results_language": "en",
        "parse": True,
    }

    # Replace the placeholder with your Base64-encoded username:password
    headers = {
        "accept": "application/json",
        "content-type": "application/json",
        "authorization": "Basic [YOUR_BASE64_ENCODED_CREDENTIALS]",
    }

    response = requests.post(url, json=payload, headers=headers)
    print(response.text)
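
When the same request is needed for several keywords, the payload can be generated instead of written by hand. The sketch below reuses the field names from the snippet above. The helper `build_payload` is hypothetical, and which values the API accepts for each field is an assumption to check against the help documentation.

```python
import json


def build_payload(query: str, num_pages: int = 1,
                  language: str = "en", parse: bool = True) -> dict:
    """Build a request body using the field names from the example above."""
    if not query.strip():
        raise ValueError("query must not be empty")
    return {
        "target": "google_search",
        "query": query,
        "page_from": "1",
        # The example above passes page counts as strings, so do the same here.
        "num_pages": str(num_pages),
        "google_results_language": language,
        "parse": parse,
    }


# One payload per keyword; each would be sent as its own request.
keywords = ["pizza", "pasta"]
payloads = [build_payload(keyword, num_pages=2) for keyword in keywords]
print(json.dumps(payloads[0], indent=2))
```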

Free tools, same great user-friendliness

X Browser

Juggling multiple profiles has never been easier. Get unique fingerprints and use as many browsers as you need, risk-free!

Chrome Browser Extension

Easy to use, damn powerful. A proxy wonderland in your browser, accessible in 2 clicks. Free of charge.

Firefox Browser Add-on

Easy to set up, even easier to use. The virtual world at your fingertips in 2 clicks. Free of charge.

Proxy Checker

Verify your IPs with our free Proxy Checker. Check your IPs quickly and efficiently to avoid potential errors.


Equip Yourself With SERP Scraping API

Ready-to-use powerful scraping APIs – only at Smartproxy.
