How to Bypass CAPTCHA With Puppeteer: A Step-By-Step Guide

Since their inception in 2000, CAPTCHAs have been crucial for website security, distinguishing human users from bots. They are a savior for website owners and a nightmare for data gatherers. While CAPTCHAs enhance website integrity, they pose challenges for those reliant on automated data gathering. In this comprehensive guide, we delve into the fundamentals of Puppeteer, focusing on techniques for CAPTCHA detection and avoidance using Puppeteer. We also explore strategies for how to bypass CAPTCHA verification, methods for solving CAPTCHAs with specialized third-party services, and the alternative solutions provided by our Site Unblocker.

What is a CAPTCHA?

CAPTCHAs (abbreviation of "Completely Automated Public Turing test to tell Computers and Humans Apart") are automated tests used on websites to determine whether a user is a human or a bot.

They aim to prevent spam and automated data extraction by ensuring only human users can access certain website functions. So, CAPTCHAs benefit website security but hinder individuals and businesses from performing automated data access and collection.

For website visitors, CAPTCHAs typically involve tasks like identifying distorted text, images, or solving puzzles. There are a few versions of reCAPTCHA, a free service provided by Google that protects websites from spam and abuse: the original version with distorted text, the "I’m not a robot" checkbox and Invisible reCAPTCHA in version 2, and the background scoring system without user interaction in version 3.

Challenges of dealing with CAPTCHAs

For businesses and individuals relying on web scraping for data collection, CAPTCHAs can halt their operations, requiring manual intervention to bypass them. This not only slows down the data collection process but also increases operational costs and complexity.

As CAPTCHAs have become more sophisticated, traditional methods can no longer reliably overcome them. Therefore, data collection requires more advanced solutions; for example, AI-driven CAPTCHA solvers or even human-powered solving services.

Introduction to Puppeteer

Puppeteer is a Node.js library developed by Google, which provides a high-level API to control headless Chrome or Chromium browsers. Essentially, it enables developers to programmatically perform actions in a web browser environment, as if a real user were navigating and interacting with websites.

With Puppeteer, developers can automate a wide range of browser interactions. This includes tasks like rendering web pages, capturing screenshots, generating PDFs of pages, and form submissions. It simulates real user actions: clicking buttons, filling out forms, and navigating from page to page.

Puppeteer is particularly effective for web scraping, automated testing of web applications, and performance monitoring. So, it’s an invaluable tool for developers looking to automate browser-based tasks and navigate around CAPTCHAs.

Getting started with Puppeteer

Let’s install Puppeteer and set up a basic project to get our feet wet. We’ll use a script to visit our specified website and perform some actions.

- First, install Node.js by downloading and running the installer according to your operating system.

- Then, create a new folder for your project and navigate into it using your command line or Terminal. To navigate into your folder, use the `cd` command followed by the path to your folder. For example, `cd Desktop/MyPuppeteerProject`.

- Initialize a Node.js project by running `npm init -y` to create a package.json file.

- Install Puppeteer by running the line `npm install puppeteer`.

- Next, create a JavaScript file using an IDE or a text editor. Copy and paste the Puppeteer script below, and save the file as index.js in your Puppeteer project’s folder.

const puppeteer = require('puppeteer');async function openWebPage() {const browser = await puppeteer.launch({ headless: "new" });const page = await browser.newPage();await page.goto('https://smartproxy.com/');// Extract and log page title with cyan colorconst title = await page.title();console.log("\x1b[36m%s\x1b[0m", "Page title:" + title); // Cyan color// Extract and log page content with yellow colorconst content = await page.$eval('*', el => el.innerText);console.log("\x1b[33m%s\x1b[0m", "Page content:" + content); // Yellow color// Capture and save a screenshot of the page's visible area at set dimensionsawait page.setViewport({ width: 1920, height: 1080 });await page.screenshot({ path: 'screenshot.png' });// Capture and save a screenshot of the full pageawait page.screenshot({ path: 'full_page_screenshot.png', fullPage: true });await browser.close();}openWebPage();

6. Finally, you can run your script with the `node index.js` command.

This script will launch a headless browser, open the webpage https://smartproxy.com/, display its textual content in your command prompt or Terminal, save a screenshot of the uppermost page area and the full page, and then close the browser.

Always make sure that your commands are typed and executed in the command line or Terminal while you’re in the directory of your project. This ensures that your installations and configurations are specific to your Puppeteer project.

Detecting CAPTCHAs with Puppeteer

The first step in handling CAPTCHAs with Puppeteer is identifying them on a webpage. Here’s an easy approach to detecting a CAPTCHA that you can incorporate into your script:

const page = await browser.newPage();await page.goto('https://example.com'); // Replace the example website with your target URLconst isCaptchaPresent = await page.evaluate(() => {return document.querySelector('.captcha-class') !== null; // Replace '.captcha-class' with the actual selector for the CAPTCHA element});

We’ll use the website https://www.google.com/recaptcha/api2/demo as our example. Although it’s not an ideal target because it always shows a CAPTCHA, let’s use it just as an exercise. When we inspect the webpage’s HTML structure, we find that the CAPTCHA class is "g-recaptcha". Then, the full script we can run will look something like this:

const puppeteer = require('puppeteer');async function run() {const browser = await puppeteer.launch();const page = await browser.newPage();await page.goto('https://www.google.com/recaptcha/api2/demo');const isCaptchaPresent = await page.evaluate(() => {return document.querySelector('.g-recaptcha') !== null;});console.log('Is CAPTCHA present:', isCaptchaPresent);// Capture and save a screenshot of the full pageawait page.screenshot({ path: 'full_page_screenshot.png', fullPage: true });await browser.close();}run().catch(console.error);

Since we’re targeting a website that always poses a CAPTCHA challenge, the result will indicate "Is CAPTCHA present: true". You’ll also find a screenshot in your project folder of the full page where you can see the detected CAPTCHA.

Once the detection mechanism is in place, the script automatically recognizes CAPTCHAs across multiple pages or sessions. This is particularly useful for large-scale scraping operations where manually checking each page isn't feasible. Some websites may not always present a CAPTCHA; it might only appear under certain conditions (like after multiple rapid requests). Automated detection allows the script to adapt to these dynamic scenarios, identifying CAPTCHAs whenever they appear.

In scenarios where manual intervention is required to solve a CAPTCHA, the detection step can trigger an alert to the user. This is particularly useful in semi-automated systems where user input is occasionally needed. In more complex scripts, CAPTCHA detection can be integrated with other conditional actions. For example, if a CAPTCHA is detected, the script might log this event, pause operations, or switch to a different task.

Unblock any target with Site Unblocker

Leave CAPTCHAs, geo-restrictions, and IP blocks behind with our proxy-like solution.

Bypassing CAPTCHAs with Puppeteer

CAPTCHA avoidance strategies

Mimicking human behavior to avoid triggering CAPTCHAs is a key strategy when using Puppeteer for web scraping or automation. Below, you’ll find some specific Puppeteer bypass CAPTCHA tactics, each accompanied by step-by-step instructions for implementation. Remember to replace placeholders like selector, text, your_user_agent_string, maxDelay, minDelay, etc., with actual values relevant to your script. These snippets are intended to be integrated into your existing Puppeteer script where appropriate.

- Randomize clicks and mouse movements. Use Puppeteer’s mouse movement functions to simulate human-like cursor movements. Instead of directly clicking on a target, move the cursor in a non-linear path to the button or link and then click.

await page.mouse.move(Math.random() * 1000, Math.random() * 1000);await page.mouse.click(Math.random() * 1000, Math.random() * 1000);

- Slow down actions. Rapid interactions are a red flag for automated behavior. Slowing down the script’s actions makes them appear more human-like, so you can achieve that by introducing delays between actions, such as clicks, form submissions, and page navigation. Use setTimeout or Puppeteer's built-in delay functions.

await page.waitForTimeout(Math.random() * (maxDelay - minDelay) + minDelay);

- Randomize interaction timings. Humans don't operate at consistent intervals, so randomizing your timing helps simulate human unpredictability. Instead of using fixed delays, randomize the time intervals between actions.

await page.type(selector, text, { delay: Math.random() * 100 });

- Use realistic user agents. Websites often check the user agent to identify bots. Using a realistic user agent helps blend in with regular traffic. Rotate the user agent of your Puppeteer browser frequently to mimic different real browsers and devices.

await page.setUserAgent('your_user_agent_string');

- Limit the rate of requests. It’s advisable to limit the rate of your requests, as excessive request rates are a common characteristic of bots and can trigger CAPTCHAs. Implementing rotating proxies can help make these requests appear from varied locations, reducing the risk of being flagged by the server.

// Between page navigations or requestsawait page.waitForTimeout(5000); // Adjust the time as needed

- Simulate natural scrolling. Automated scripts often jump directly to page sections, while humans tend to scroll through pages. It’s a good idea to implement smooth and varied scrolling behaviors on pages.

await page.evaluate(() => {window.scrollBy(0, window.innerHeight);});

- Handle cookies and sessions like a human. Present the script as a returning human user rather than a new, suspicious bot each session. Maintain cookies and session data across browsing sessions, and consider implementing occasional logins if the site requires authentication.

// To save cookiesconst cookies = await page.cookies();// Save cookies to a file or database// To set cookies in a new sessionawait page.setCookie(...cookies);

Implementing these strategies requires a balance between making the script efficient and making it appear human-like. Overdoing these tactics can significantly slow down your automation, so find a middle ground that suits your specific use case.

The Stealth extension to bypass CAPTCHAs

While the above suggestions offer more control and customization, they require more effort. You can try the Stealth extension for a more straightforward approach. Puppeteer Stealth is an extension that significantly enhances Puppeteer’s ability to mimic human-like browsing, making it difficult for websites to distinguish between a bot and a human user.

- Begin by installing the necessary packages in your command prompt or Terminal:

npm install puppeteer-extra puppeteer-extra-plugin-stealth

2. In your Node.js script, include the required modules:

const puppeteerExtra = require('puppeteer-extra');const StealthPlugin = require('puppeteer-extra-plugin-stealth');puppeteerExtra.use(StealthPlugin());

3. Create a headless browser instance and navigate to your target URL. The setUserAgent method is crucial as it disguises your bot as a regular browser, helping to bypass certain anti-bot measures. You might want to change this string depending on the specific browser or device profile you want to mimic.

(async () => {const browser = await puppeteerExtra.launch();const page = await browser.newPage();await page.setViewport({ width: 1280, height: 720 });await page.setUserAgent('Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36');await page.goto('https://www.whatsmyua.info/');await page.waitForTimeout(10000); // 10-second delayawait page.screenshot({ path: 'screenshot.png' });await browser.close();})();

Apart from the user agent string, don’t forget to replace the https://www.whatsmyua.info/ URL with your target website’s URL. You can also modify the viewport dimensions and the 10000 (10 seconds) delay time to something else.

If your screenshot captures the website’s content without triggering a CAPTCHA, your setup was successful! However, be aware that Puppeteer Stealth might not work on all websites, especially those with advanced anti-bot mechanisms.

Bypassing CAPTCHAs with Site Unblocker

In case the CAPTCHA avoidance strategies and the Stealth extension don’t cut it for your targets or just seem like too much of a headache, we recommend trying our Site Unblocker to bypass CAPTCHAs.

Site Unblocker is an advanced proxy solution that integrates as a proxy yet lets you gather data from websites with even the most sophisticated anti-bot systems. This tool has automatic proxy rotation and pool management, browser fingerprinting, JavaScript rendering, and other features to avoid CAPTCHAs, IP bans, geo-blocking, and other challenges. Here’s how you can set it up:

- Create a Smartproxy account on our dashboard.

- Click on Site Unblocker on the left panel and choose a subscription plan that suits your needs.

- Then, go to the Proxy setup tab to set up your proxy user credentials.

- You can go to the API Playground tab to send requests, save results, and copy the cURL command.

- Explore our detailed documentation for sample code and further insights on available parameters, including integration examples in cURL, Python, and Node.js.

Solving CAPTCHAs with Puppeteer

We’ve discussed bypassing CAPTCHAs, which involves avoiding the triggering of CAPTCHA challenges in the first place. But what about solving them? Optical Character Recognition (OCR), machine learning, and third-party services offer potential solutions, each with advantages and drawbacks.

OCR technology converts documents, such as scanned paper documents or images captured by a digital camera, into editable and searchable data. It’s great for simple CAPTCHAs and is accessible for developers, but it struggles with complex CAPTCHAs and may not always provide accurate results.

Machine learning, particularly deep learning models, can be trained to solve more complex CAPTCHAs, including image-based challenges. These models can be trained and retrained on new CAPTCHA types as they evolve. However, training models require significant computational resources and a large dataset of CAPTCHA examples.

Third-party CAPTCHA-solving services employ human solvers or advanced algorithms to solve CAPTCHAs on behalf of clients for a fee. This option offers high accuracy and ease of use via an API that can be integrated into automation scripts. The drawbacks include the cost of such services, which can add up, especially for large-scale operations. If incorporating a third-party service with Puppeteer to solve CAPTCHA sounds right, go ahead!

Best practices with Puppeteer

When using Puppeteer for web scraping, it's essential to approach the task with respect for the websites you interact with. Adhering to best practices not only ensures the sustainability of your scraping activities but also respects the legal and ethical boundaries set by website owners. Here are some things to keep in mind when using Puppeteer:

- Adherence to rules. Always read and respect the terms of service of any website you scrape. Many websites explicitly prohibit automated data extraction, and ignoring these terms can lead to legal consequences and being permanently banned from the site.

- Rate limiting. Avoid overwhelming your target website’s server so you don’t get rate-limited. This means making requests at a reasonable interval, mimicking human browsing patterns rather than rapid, constant scraping.

- Selective data extraction. Be selective about the data you scrape. Extract only what you need, reducing the load on the website’s server and the amount of data you need to process.

- Use APIs when they are available. If the website offers an API for accessing data, use it. APIs provide a more efficient and often legally sanctioned way to access the data you need.

- User-agent string. Set a legitimate user-agent string in your Puppeteer script. This transparency can help in situations where a website might block unknown or suspicious user-agents.

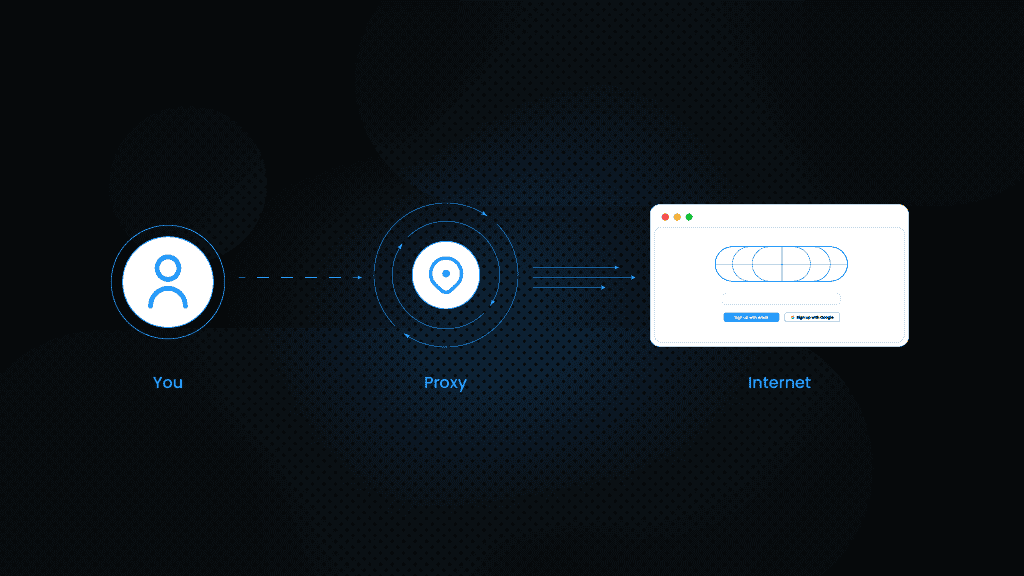

- Integrate proxies. Incorporating proxies into your Puppeteer projects can significantly enhance data-gathering efficiency. Proxies allow for IP rotation and request distribution, reducing the risk of being blocked while maintaining a respectful load on target servers.

Use cases and applications

There are plenty of practical use cases where businesses and developers might find legitimate benefits in using Puppeteer for CAPTCHA challenges. Here are some of them:

- Competitive analysis. Businesses can leverage web scraping for such market intelligence as pricing, product offerings, and consumer reviews from publicly accessible websites. Pages with the most valuable information often incorporate CAPTCHAs or other methods to prevent automatic data collection. Puppeteer allows automation of this process and helps bypass these restrictions to gather real-time data, enabling them to make informed decisions based on current market trends. With this approach, businesses can stay agile and responsive to market shifts in dynamic industries where prices and product offerings change frequently.

- Academic and market research. Researchers in academic and market research fields often require access to extensive datasets from various online sources. Web scraping with Puppeteer allows access to these websites, some of which might be protected by CAPTCHAs. This capability is crucial for gathering a broad spectrum of data, ranging from social media trends and public opinion to economic indicators and demographic statistics.

- Testing web applications and user experience. Developers can use Puppeteer to automate testing of their own web applications, including those that implement CAPTCHAs. This helps ensure the CAPTCHA implementation doesn’t obstruct user experience and functions as intended.

Final thoughts

Our exploration into Puppeteer’s capabilities reveals a versatile toolkit for CAPTCHA detection, avoidance, and solution. Basic CAPTCHA avoidance strategies can be helpful for some websites but might not suffice for others. Meanwhile, advanced techniques like employing OCR, machine learning, and third-party services can effectively solve complex CAPTCHAs at a cost.

Unlike third-party CAPTCHA-solving services, Site Unblocker can provide a more streamlined and cost-effective approach to bypassing CAPTCHAs for enhanced web scraping and automation efficiency. May your online adventures be CAPTCHA-free!

About the author

Dominykas Niaura

Technical Copywriter

Dominykas brings a unique blend of philosophical insight and technical expertise to his writing. Starting his career as a film critic and music industry copywriter, he's now an expert in making complex proxy and web scraping concepts accessible to everyone.

Connect with Dominykas via LinkedIn

All information on Smartproxy Blog is provided on an as is basis and for informational purposes only. We make no representation and disclaim all liability with respect to your use of any information contained on Smartproxy Blog or any third-party websites that may belinked therein.