Alternative Google SERP Scraping Techniques - Terminal and cURL [VIDEO]

Google has become a gateway to easily accessible information. And one of the best ways to make use of Google’s limitless knowledge is data scraping. We’ve just released a detailed blog post about scraping Google SERPs with Python, where we cover lots of useful info, including the technical part. So check it out before you dive into this tutorial.

But what if Python is not exactly your forte? This blog post will show you how to scrape SERPs using a simpler method. One that doesn't require much tech knowledge or downloading loads of applications and software. So, what do you know about web scraping with cURL and Terminal?

Defining cURL and Terminal

cURL

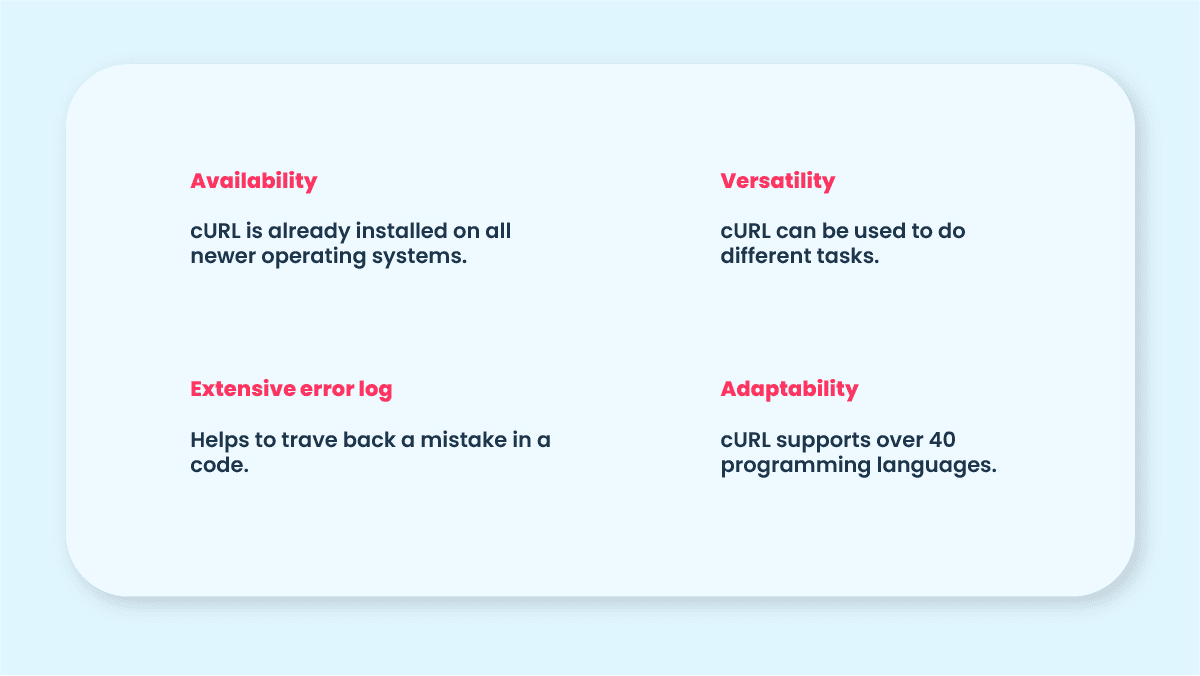

cURL or client URL (also written as curl) is a neat piece of free and open-source software that allows you to collect and transfer data by using the URL syntax. cURL is a widely used tool, popular for its flexibility and adaptability – it can run on almost all platforms.

This software also supports more than 20 protocols (HTTP, HTTPS, FTP, and others), as well as proxies, SSL connections, and FTP uploads. You can even use cURL to download images from the internet or authenticate users. It’s a pretty versatile tool.
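To see the "URL syntax" idea in action without touching the internet, here is a minimal sketch that uses cURL's FILE protocol to copy a local file. It assumes cURL is installed and was built with FILE support (the usual default); the file names are made up for illustration.

```shell
# Work in a throwaway directory so nothing on your machine is touched.
workdir=$(mktemp -d)
printf 'hello from cURL\n' > "$workdir/source.txt"

# Same pattern as a web download: curl [options] <URL>.
# Here the URL uses the file:// scheme instead of https://.
curl --silent --show-error \
  --output "$workdir/copy.txt" \
  "file://$workdir/source.txt"

# Print what was transferred.
cat "$workdir/copy.txt"
```

Swapping the file:// URL for an https:// one is all it takes to fetch a page or an image from the web instead.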

If you’re looking to get knee-deep in cURL knowledge, we have a great blog post that covers it all. From history to usage and installation (only if needed, since recent versions of macOS, Linux, and Windows already have it installed), everything is there, so check it out.

Advantages of using cURL

Terminal

You’ve probably heard of it, or already know a thing or two about it. But to define it: Terminal (a.k.a. the console or command line) is an interface in macOS and Linux that lets us run and automate various tasks without using a GUI (graphical user interface).

In the most basic sense, you can give Terminal text-based commands to accomplish tasks, initiate programs, open documents, manipulate and download files. The main benefit of using a Terminal is that it’s much faster to do certain tasks than using a GUI.
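As a quick illustration of such text-based commands, here is a small sketch that creates a folder, writes a file, copies it, and reads it back. The folder and file names are made up, and everything happens in a temporary directory.

```shell
# Create a temporary directory to experiment in.
workdir=$(mktemp -d)

mkdir "$workdir/notes"                                  # create a folder
echo "best pizza in London" > "$workdir/notes/todo.txt" # create a file with some text
cp "$workdir/notes/todo.txt" "$workdir/notes/backup.txt" # copy the file
ls "$workdir/notes"                                     # list the folder's contents
cat "$workdir/notes/backup.txt"                         # print the copy
```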

Why cURL and Terminal?

For starters, it doesn’t require much tech knowledge. Also, both cURL and Terminal already come with macOS and most Linux distributions, so you don’t have to worry about downloading additional tools. If, however, you have an older version that doesn’t have cURL, you can easily download it at https://curl.se/download.html.
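If you're not sure whether cURL is on your machine, a quick check along these lines should tell you. This is only a sketch; the `curl_status` variable is a name chosen for this example.

```shell
# command -v prints the path of a program if the shell can find it.
if command -v curl >/dev/null 2>&1; then
  curl_status="installed"
  # The first line of the version output shows the cURL release.
  curl --version | head -n 1
else
  curl_status="missing"
  echo "cURL not found. Download it at https://curl.se/download.html"
fi
```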

Scraping Google search results with cURL in terminal: tutorial

Finally, action time! In this tutorial, we’ll go step-by-step, guiding you throughout the process. You’ll not only learn how to use cURL, but you’ll also have a better understanding of its versatility and simplicity when dealing with smaller tasks. Oh, right – you’ll also learn a bit about web scraping!

What you’ll need to get started with this tutorial

Before we jump in, let’s go over the most important things you’ll need. First things first: here are some basic terms and input elements for a web scrape request with cURL:

- POST. An HTTP request method used to send information to a server.

- Target. Indicates where information will be retrieved from.

- Query. With this, you’re going to specify what kind of information you’re after.

- Locale. Interface language.

- Results language. It means just that: the language in which the results of your query are returned.

- Geographical location. Specifies the location you want to target.

- Header. Contains information about the request: user agent, content type, etc.

You’ll also need a SERP API. Scraping Google search engine results pages can be a challenge without a proper API. Smartproxy has a SERP Scraping API: a great set of magical tools (a proxy network, scraper, and parser) that will help you kick off your web scraping project.

With our software, you’ll not only have access to a user-friendly API, but you’ll also get reliable proxies that will keep those pesky CAPTCHAs at bay. It might seem a little overwhelming at first; that’s why we also have detailed documentation to help you better understand and use our product. But as far as this tutorial is concerned, you won’t need to use our SERP Scraping API that much.

Step 1: Getting started

First things first, you’ll want to sign up to our dashboard. It’s super easy and takes no time at all. Once you’ve gone through the whole process of creating an account and verifying it, go ahead and log in.

In the menu on your left, click on “SERP” (under the “Scraping APIs” section) and subscribe to a plan of your choosing. Then, choose the user:pass authentication method and create a user. After that, you’re pretty much all set!

Oh and if you’re having trouble or you’d simply like to learn more about our API, check out our documentation. There you’ll find detailed information that almost literally covers it all.

Step 2: Accessing Terminal

Now that you have access to Smartproxy’s SERP API, it’s finally time to start scrapin’. If you’re using macOS, head over to Finder and search for Terminal. Linux users can either press Ctrl+Alt+T or click on “Dash” and type in Terminal.

Step 3.1: Let’s start cURL’ing

It’s time to enter your first line of code! Don’t worry, there’s actually not a lot of it. And to make it as clear as possible, we’ll go line by line, so you know exactly what’s going on. The line you’re about to enter will create a request to send information:

curl --request POST \

Press Enter (the backslash at the end of the line tells Terminal that the command continues on the next line) and then add another line of code that specifies the endpoint. The link used here will always be the same no matter the scraping request. Just go ahead and re-use it for future requests.

--url https://scrape.smartproxy.com/v1/tasks \

Add a header line that specifies the format in which you want to receive the response to this request:

--header 'Accept: application/json' \

It’s time to show some credentials – the username and password you created for your proxy user. But you can’t just write it as it is, you’ll have to encode it first.

You can do that straight from your Terminal. The command below runs locally on your machine (no website involved) and prints a Base64-encoded version of your login information. Run it before you start building the request:

echo -n 'user:pass' | openssl base64

For this tutorial, we encoded “user:pass”, which gives dXNlcjpwYXNz. Yours, of course, will be different.
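To make it clearer why the text must be encoded without a trailing newline, here is a small sketch. It swaps in the `base64` utility for `openssl base64` (an assumption that it is available on your system; both produce the same result for this input) and uses `printf` so that newline handling is explicit.

```shell
# 'user:pass' is the tutorial's placeholder; swap in your own credentials.
credentials='user:pass'

# printf '%s' writes the text without a trailing newline.
token=$(printf '%s' "$credentials" | base64)
echo "$token"                 # dXNlcjpwYXNz

# If a newline sneaks in, it gets encoded too, and the result
# no longer matches your real credentials.
token_with_newline=$(printf '%s\n' "$credentials" | base64)
echo "$token_with_newline"    # dXNlcjpwYXNzCg==
```

If your encoded string ends in something like Cg==, a stray newline was most likely included.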

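Now that you have your credentials, let’s add another header that will let you access our API. The encoded string goes after the word Basic, which is the standard HTTP scheme for user:pass authentication.

--header 'Authorization: Basic dXNlcjpwYXNz' \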

Next up, we’ll write a code line that specifies the format of the data we’re sending with this request. In our case, the request details will be written as JSON.

--header 'Content-Type: application/json' \

And finally, with the code line below, we’ll indicate that we’re about to add the details of our request.

--data '{

Step 3.2: Getting what you need

You’re halfway there! Now comes the fun part. We’re going to specify what kind of information we want, where we want to get it from, what language and locale to scour. We’ll also make sure to have the information parsed. Otherwise, you’ll get a block of messy text filled with letters, numbers, and symbols.

For the purpose of this tutorial, let’s say we’re looking for the best pizza in London. Go ahead and type in the rest of the code for this request:

"target": "google_search",
"query": "best pizza",
"parse": true,
"locale": "en-GB",
"google_results_language": "en",
"geo": "London,England,United Kingdom"
}'

It doesn’t seem that overwhelming now that we’ve broken it all down line by line, right? Put together, the whole request should look like this. You can copy-paste it straight into your Terminal; just don’t forget to change the authorization header:

curl --request POST \
--url https://scrape.smartproxy.com/v1/tasks \
--header 'Accept: application/json' \
--header 'Authorization: Basic dXNlcjpwYXNz' \
--header 'Content-Type: application/json' \
--data '{"target": "google_search","query": "best pizza","parse": true,"locale": "en-GB","google_results_language": "en","geo": "London,England,United Kingdom"}'
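If you plan to run this request with different queries or locations, you could wrap it in a couple of shell functions. The sketch below is one possible way to do it: `build_payload` and `scrape_serp` are hypothetical names, while the endpoint, headers, and JSON fields are the ones used in this tutorial. It assumes the query and location contain no double quotes or backslashes.

```shell
API_URL='https://scrape.smartproxy.com/v1/tasks'

# Build the JSON body. $1 = query, $2 = geographical location.
build_payload() {
  printf '{"target": "google_search", "query": "%s", "parse": true, "locale": "en-GB", "google_results_language": "en", "geo": "%s"}' "$1" "$2"
}

# Send the request. $1 = value of the Authorization header, $2 = query, $3 = location.
scrape_serp() {
  curl --request POST \
    --url "$API_URL" \
    --header 'Accept: application/json' \
    --header "Authorization: $1" \
    --header 'Content-Type: application/json' \
    --data "$(build_payload "$2" "$3")"
}

# Dry run: print the body that would be sent, without contacting the API.
payload=$(build_payload 'best pizza' 'London,England,United Kingdom')
echo "$payload"
```

Once the printed body looks right, call `scrape_serp` with your own authorization value, a query, and a location to send the real request.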

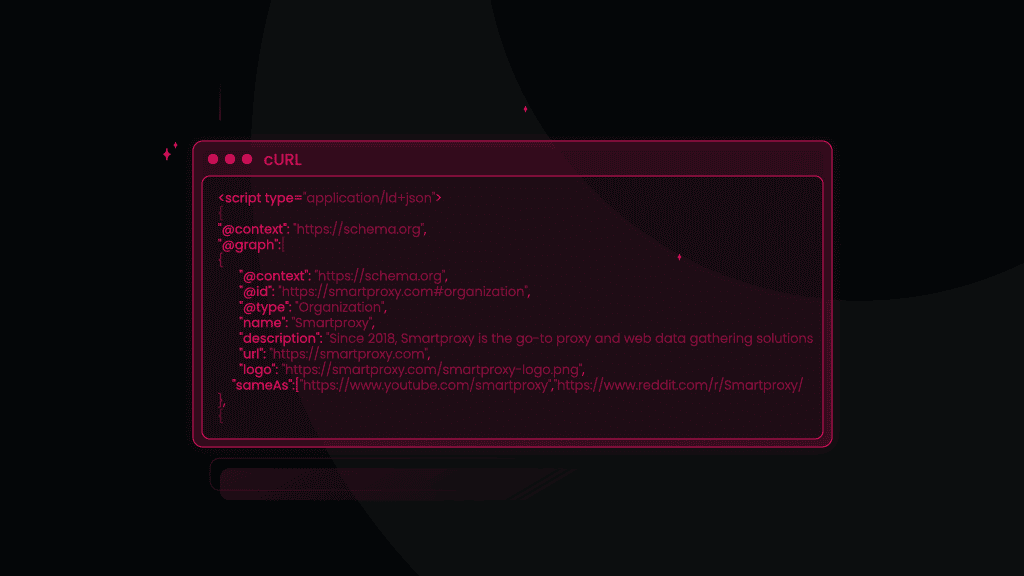

There, your request is complete! After a couple of seconds, you should get the information you requested in a neatly parsed format. All that’s left to do is go through the provided links and find out where that one and only pizza is at.

{"results":[{"content":{"url": "https://www.google.com/search?q=best+pizza&lr=lang_en&nfpr=1&hl=en","page": 1,"results":{"paid":[],"organic":[{"pos":1,"url": "https://www.cntraveller.com/gallery/best-pizza-london","desc": "15 Jul 2021 \u2014 The best pizza in London 2021 \u00b7 Voodoo Rays. Voodoo Ray's. DALSTON. It isn't just the pizzas that are New York-style here: the neon signage,\u00a0...","title": "The best pizza in London 2021 | CN Traveller","url_shown": "https://www.cntraveller.com\u203a ... \u203a Eating & Drinking","pos_overall": 7},
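To pull just the titles and links out of a response like this, something along these lines can work right in Terminal. It's a rough sketch using `grep` and `sed`, run on a trimmed sample built from the excerpt above (a few fields kept, brackets closed by hand), and it assumes the values contain no escaped double quotes.

```shell
# Trimmed sample based on the excerpt above; a real response is much longer.
response='{"results":[{"content":{"results":{"paid":[],"organic":[{"pos":1,"url": "https://www.cntraveller.com/gallery/best-pizza-london","title": "The best pizza in London 2021 | CN Traveller","pos_overall": 7}]}}}]}'

# grep -o prints only the matching "key": "value" pairs, one per line;
# sed then strips the key and the surrounding quotes.
titles=$(printf '%s\n' "$response" | grep -o '"title": *"[^"]*"' | sed 's/^"title": *"//; s/"$//')
urls=$(printf '%s\n' "$response" | grep -o '"url": *"[^"]*"' | sed 's/^"url": *"//; s/"$//')

printf 'Title: %s\nURL:   %s\n' "$titles" "$urls"
```

On a full response, the "url" pattern would also pick up the Google search page's own address, so you may want to filter that line out. A dedicated JSON tool such as jq is a sturdier choice if you have it installed.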

Wrapping up

And there we have it – you’ve just successfully web scraped! Granted, it might still seem a little much, but we all have to start somewhere. With a bit of patience and practice, you’ll soon be taking on big web scraping projects.

Hopefully, now you have a better understanding of how web scraping works and how beneficial it is to gather data, compare and analyze it. Try out this Google SERP scraping technique with other requests and start getting results!

About the author

Mariam Nakani

Say hello to Mariam! She is very tech savvy - and wants you to be too. She has a lot of intel on residential proxy providers, and uses this knowledge to help you have a clear view of what is really worth your attention.

All information on Smartproxy Blog is provided on an as-is basis and for informational purposes only. We make no representation and disclaim all liability with respect to your use of any information contained on Smartproxy Blog or any third-party websites that may be linked therein.