How to Leverage ChatGPT for Effective Web Scraping

Artificial intelligence is transforming various fields, ushering in new possibilities for automation and efficiency. As one of the leading AI tools, ChatGPT can be especially helpful in the realm of data collection, where it serves as a powerful ally in extracting and parsing information. So, in this blog post, we provide a step-by-step guide to using ChatGPT for web scraping. Additionally, we explore the limitations of using ChatGPT for this purpose and offer an alternative method for scraping the web.

What is ChatGPT?

Developed by OpenAI, ChatGPT (Chat Generative Pre-trained Transformer) is a language model trained on a diverse dataset, enabling it to understand and generate human-like text based on input.

Its various capabilities make it an invaluable asset for professionals and enthusiasts who seek to leverage AI for various tasks. ChatGPT opens up new avenues for efficient and sophisticated web scraping strategies as it simplifies the process of web scraping and enhances the quality of the data collected by minimizing errors.

ChatGPT can be an excellent tool for web scraping because it enables anyone to jump in without being familiar with coding, speeds up the process of creating scripts, and allows customization to get exactly the data you need.

How to use ChatGPT for web scraping

ChatGPT can’t directly scrape web data; it can only access URLs by using the browser tool within its capabilities on the GPT-4 model and summarize the content of webpages. However, this tool serves as a valuable assistant for web scraping tasks by helping to produce scripts and algorithms tailored to specific data extraction needs.

Users must provide detailed prompts with the necessary information for ChatGPT to generate effective web scraping code. Then, the code can be repeatedly tested and refined using ChatGPT until it evolves into an optimally functioning script.

In our case, we can build a price monitoring code that could be part of a market research or price aggregation project. Follow our step-by-step guide to leveraging ChatGPT for your web scraping needs below.

Locate the elements to scrape

Before we start scraping, we need to choose a website that holds the data that interests us and identify which elements of the web page we want to collect. This involves inspecting the HTML structure of the website to find the tags, classes, or IDs associated with the data we’d like to extract.

Let’s scrape the sample website Books to Scrape. Say we’d like to retrieve the book titles and prices in the philosophy category. Our target URL would then be this: https://books.toscrape.com/catalogue/category/books/philosophy_7/index.html

To find the specific location of the book titles and prices or other elements you’re after, use your browser’s developer tools to inspect the webpage. Right-click the element you want to get information about and select Inspect (on Google Chrome and Microsoft Edge) or Inspect Element (on Mozilla Firefox and Safari) from the context menu. It will lead you to the exact location in the page’s HTML structure, from which you can right-click and copy the CSS selector. Here’s what we get in our case:

- Title: #default > div.container-fluid.page > div > div > div > section > div:nth-child(2) > ol > li:nth-child(1) > article > h3 > a

- Price: #default > div.container-fluid.page > div > div > div > section > div:nth-child(2) > ol > li:nth-child(1) > article > div.product_price > p.price_color

- Titles: ol > li > article > h3 > a

- Prices: ol > li > article > div.product_price > p.price_color

These selectors target only the first book title and price on the list due to the use of "li:nth-child(1)" which specifies the first child element. We can remove this to target all books and further simplify the string by removing the path to the ol element (since it’s the only one of its type in the HTML file) to make a neat little path that will be sufficient for the script to find the data we’re looking for:

Crafting a prompt for ChatGPT

Now that we know what we need to scrape, we can turn to ChatGPT to assist in generating the web scraping code. Crafting a precise and detailed prompt is crucial for getting useful output.

For this step, we need an OpenAI account to access ChatGPT, so sign up for an OpenAI account if you haven’t already.

Let’s describe our task to ChatGPT, including the coding language, target URL, elements we identified, and how we want it to handle the output. In this example, we’re using Python together with the Requests and BeautifulSoup libraries. For your case, you might want to include specific requirements in the prompt, such as handling pagination or dealing with dynamic content. The prompt could look similar to this:

Write a Python script using the Requests and BeautifulSoup libraries to scrape book titles and their prices from: https://books.toscrape.com/catalogue/category/books/philosophy_7/index.htmlUse the following CSS selectors for scraping:Titles: ol > li > article > h3 > aPrices: ol > li > article > div.product_price > p.price_colorEnsure the script correctly handles character encoding to prevent issues with special characters.The script should print the results and save them to a CSV file in the script's directory.

Here’s the code provided by ChatGPT:

import requestsfrom bs4 import BeautifulSoupimport csv# URL to scrapeurl = 'https://books.toscrape.com/catalogue/category/books/philosophy_7/index.html'# Send GET requestresponse = requests.get(url)# Ensure correct character encodingresponse.encoding = 'utf-8'# Parse HTML contentsoup = BeautifulSoup(response.text, 'html.parser')# CSS selectors for the datatitle_selector = 'ol > li > article > h3 > a'price_selector = 'ol > li > article > div.product_price > p.price_color'# Find data using selectorstitles = soup.select(title_selector)prices = soup.select(price_selector)# Extracting text from the tagsbooks = [(title['title'], price.text) for title, price in zip(titles, prices)]# Print resultsfor title, price in books:print(f'Title: {title}, Price: {price}')# Save results to CSVcsv_file = 'books.csv'with open(csv_file, mode='w', newline='', encoding='utf-8') as file:writer = csv.writer(file)writer.writerow(['Title', 'Price'])for book in books:writer.writerow(book)print(f'Data saved to {csv_file}')

This script does the following:

- Sends a GET request to the URL.

- Parses the HTML content using BeautifulSoup with the specified CSS selectors.

- Extracts the book titles and their prices.

- Prints the results.

- Saves the results to a CSV file in the script’s directory.

Prepare your coding environment

Ensure you’ve got a coding environment where you can write and execute scripts. Namely, you could use Jupyter Notebook, an IDE like Visual Studio Code, or a simple text editor with a command-line tool, which we shall use for this guide.

Make sure you have Python installed on your system. Then, prepare your coding environment by installing the necessary packages for web scraping. This time, we’ll use two:

- Requests – a library that simplifies HTTP requests, enabling efficient web content retrieval and interaction with APIs. It's an essential tool for web scraping and data access projects.

- BeautifulSoup – a parsing library that makes it easy to parse the scraped information from web pages, allowing for efficient HTML and XML data extraction.

You can install the packages using this command via command line:

pip install requests beautifulsoup4

Test, review, and repeat

The final step is to run the code we’ve prepared. Open a text editor, paste the ChatGPT-provided code, and save it as a "code.py" file.

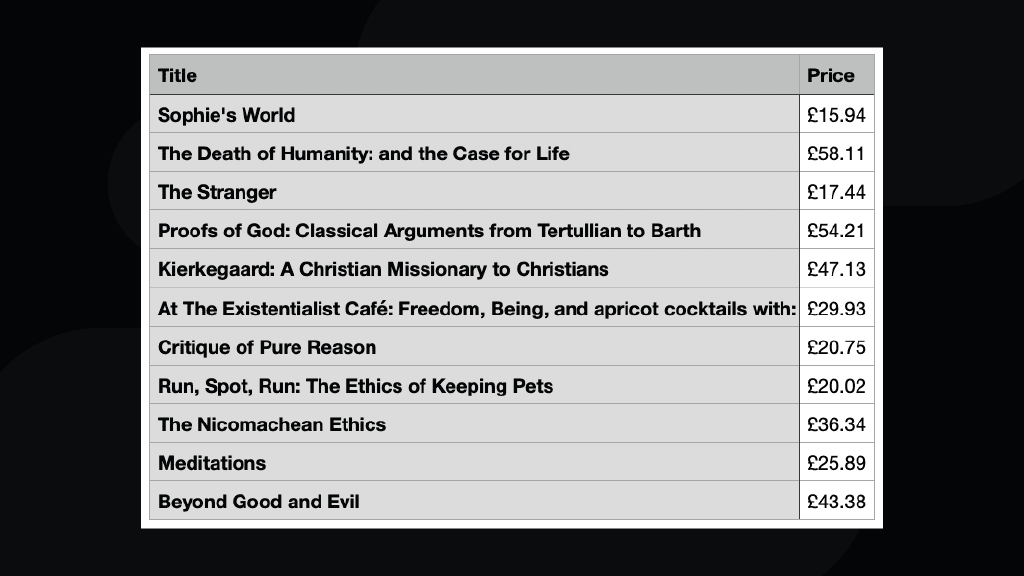

In the command-line tool, all that’s left is to run the script using "python code.py" command. Here’s how the result looks saved in a CSV file:

If any errors or issues arise during the execution of the code, you can always consult ChatGPT to solve any roadblocks.

Check the output to ensure that the collected data matches your expectations. This might involve checking for completeness, accuracy, and potential data formatting issues.

Based on the test results, you may need to adjust your script or ChatGPT prompt and run the process again. You can even ask ChatGPT to proofread your prompt so that you get the optimal code for your case. Repeat the process as necessary.

Unblock any target with Site Unblocker

Leave CAPTCHAs, geo-restrictions, and IP blocks behind with our proxy-like solution.

Limitations of ChatGPT web scraping

While ChatGPT is a powerful tool to facilitate the creation of web scrapers, it’s crucial to understand its limitations. These limitations aren’t necessarily due to ChatGPT’s capabilities but stem from the nature of web scraping itself and the environment in which it operates. Here are a few challenges that ChatGPT encounters in web scraping.

Anti-scraping technology

Many websites implement anti-scraping technology to protect their data from being harvested. ChatGPT can help draft code to navigate some obstacles, but there are numerous complex anti-scraping measures, such as CAPTCHAs, rate limiting, IP bans, and JavaScript rendering.

- Dynamic content and JavaScript. ChatGPT may struggle to provide solutions for websites that heavily rely on JavaScript to render content, as it’s unfamiliar with how the site functions and how elements are loaded

- Advanced anti-scraping technologies. Some websites use sophisticated detection methods to distinguish bots from human users. Bypassing these measures may require advanced techniques beyond ChatGPT’s current advising capacity, such as using headless browsers to mimic human interaction patterns.

- Frequent updates. Maintaining a scraper involves regular updates to the code to accommodate changes in the target website’s structure or anti-scraping measures.

- Scalability. Scaling up your operations to scrape more data or sites can introduce complexity. ChatGPT may offer code optimization suggestions, but managing a large-scale scraping operation requires robust infrastructure and efficient data management strategies beyond ChatGPT’s advisory scope.

- Session management and cookies. Managing sessions and cookies to maintain a logged-in state or navigate multi-step processes can be challenging. ChatGPT might provide a basic framework, but the nuances of session handling often require manual tuning.

- Interactive elements and forms. Dealing with CAPTCHAs, interactive forms, or dynamically generated content based on user actions can present significant hurdles. ChatGPT’s advice in such situations might need supplementation with more sophisticated, tailored solutions.

Maintenance and scalability

All web scraping scripts require regular maintenance to ensure their continued effectiveness. Websites often change their structure, which can break a scraper’s functionality.

High complexity

Some web scraping tasks involve complex navigation through websites, session management, or handling forms and logins. While ChatGPT can generate code snippets for straightforward tasks, it’s limited when it comes to complex scraping projects.

ChatGPT web scraping alternative

When relying on ChatGPT no longer suffices for your web scraping needs, consider adding a specialized solution to your toolkit. At Smartproxy, we recommend our Site Unblocker for this purpose.

Site Unblocker is a comprehensive scraping tool designed to effortlessly extract public web data from even the most challenging targets. It eliminates concerns about CAPTCHAs, IP bans, or anti-bot systems and delivers the data in HTML with JavaScript.

Site Unblocker streamlines the data collection process by providing access to a vast network of 65M+ proxies with 195+ worldwide geo-targeting options. It supports JavaScript rendering and advanced browser fingerprinting, ensuring a 100% data retrieval success rate.

To sum up

You’ve now learned the basic principles of employing ChatGPT for web scraping! By leveraging the flexibility and power of AI tools, we’ve seen that it’s possible to significantly enhance the effectiveness and efficiency of our data collection efforts.

Sometimes, however, even the best practices aren’t enough without the right tools. That’s why we recommend exploring our Site Unblocker – a solution that transforms the complexity of web scraping into a seamless experience, eliminating CAPTCHAs, IP bans, and other obstacles. Try it out for yourself!

About the author

Dominykas Niaura

Technical Copywriter

Dominykas brings a unique blend of philosophical insight and technical expertise to his writing. Starting his career as a film critic and music industry copywriter, he's now an expert in making complex proxy and web scraping concepts accessible to everyone.

Connect with Dominykas via LinkedIn

All information on Smartproxy Blog is provided on an as is basis and for informational purposes only. We make no representation and disclaim all liability with respect to your use of any information contained on Smartproxy Blog or any third-party websites that may belinked therein.