Web Scraping in R: Beginner's Guide

As a data scientist, you’re already using R for data analysis and visualization. But what if you could also conveniently use it to gather data directly from websites? With the R programming language, you can seamlessly scrape static pages, HTML tables, and even dynamic content. Let’s explore how you can take your data collection to the next level!

What is web scraping?

Web scraping is the process of extracting data from websites in an automated way. For data scientists and developers using R, it’s a powerful technique for gathering structured and unstructured data from the web. Using specialized packages to scrape any type of content, you can collect, clean, and analyze data efficiently. This makes web scraping essential for tasks like market research, sentiment analysis, and machine learning.

Why use R for web scraping?

R is an excellent choice for web scraping because it lets you extract, clean, and analyze data in one place. Here are a few reasons to consider R:

- Seamless workflow. R allows you to scrape data, process it, and perform analysis all within the same environment, saving you time and effort compared to switching between multiple tools or programming languages.

- Strong data handling. R’s robust data manipulation capabilities, such as filtering, reshaping, and summarizing data, make it easy to clean and transform raw web data into usable formats for analysis.

- Integrated visualization. After scraping data, you can directly visualize it using R’s powerful plotting libraries like ggplot2, making it easier to uncover insights without exporting data.

- Reproducibility. R's scripting and markdown capabilities let you automate web scraping tasks and document your process, ensuring that your analysis can be easily repeated and shared for future use.

R also features many great web scraping packages, such as rvest, httr, and jsonlite. Many users rely on rvest to extract data from static HTML pages, allowing you to navigate and parse web content quickly. Meanwhile, httr helps manage HTTP requests, enabling you to interact with websites, handle cookies, and work with APIs. For dealing with JSON data, jsonlite simplifies the process of parsing and converting JSON responses into R-friendly formats. Together, these packages offer a comprehensive toolkit for web scraping in R.
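As a small illustration of the jsonlite part of this toolkit, the sketch below parses a JSON string into a data frame. The JSON text is made-up sample data standing in for an API response, not output from a real service.

```r
library(jsonlite)

# Made-up JSON payload standing in for an API response (placeholder data)
json_text <- '[
  {"country": "Examplia", "capital": "Sample City"},
  {"country": "Testland", "capital": "Demo Town"}
]'

# fromJSON() converts a JSON array of objects into a data frame
countries <- fromJSON(json_text)
print(countries)
```

Each JSON key becomes a column, so the result can go straight into the same cleaning and analysis steps as scraped HTML data.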

Tools and packages for web scraping in R

To get started with web scraping in R, you'll need to take care of some prerequisites. Follow these steps to install the necessary tools:

- Install R and RStudio. Ensure you have both R and RStudio installed on your computer. R is the programming language, while RStudio is an integrated development environment (IDE) to make your coding experience smoother.

- Launch RStudio. Once installed, open the application. If it's your first time using RStudio, familiarize yourself with the UI and the various tools and features the IDE offers.

- Create a new script file. Create a new R script file to begin writing your code (File → New File → R Script). Name it anything you like and save it.

- Install the rvest package. You’ll need the rvest package to scrape static web pages. To install it, simply type the following command in the RStudio console:

install.packages("rvest")

That's the basic setup you need to start scraping with R. With these tools in place, you're all set to dive into web scraping and begin extracting data from websites.

Getting started with web scraping in R

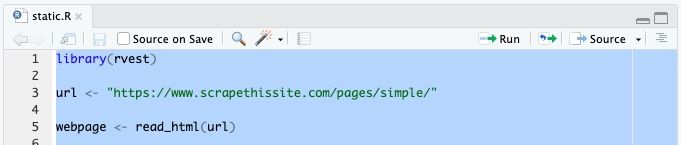

To scrape the web with R, you need to create a script that outlines the entire process. Here’s a step-by-step guide to writing the script:

1. Import the package. Load the package you installed earlier on the first line of your script.

library(rvest)

2. Choose a website. Create a url object that stores the address of the website you want to scrape. For this example, let's target a website made for practicing web scraping.

url <- "https://www.scrapethissite.com/pages/simple/"

3. Read the HTML. Use the read_html() function to scrape the HTML of the target website.

webpage <- read_html(url)

4. Select the nodes. To determine the correct node, use the Inspect Element function in your browser. You'll need to find the ID or the class name of the element that holds the content you need. On this page, each country's details sit inside an element with the "country" class, so select all of them with the ".country" selector. The loop in step 6 iterates over the resulting web_data object:

web_data <- webpage %>% html_nodes(".country")

5. Create a list for the results. For more meaningful results, you'll also want to get the capital, population, and area size in addition to the country name. Create an empty list to store the scraped data; it will be combined into a data frame in step 7:

results_list <- list()

6. Loop through the data. Start a for loop over the nodes selected in step 4. In each iteration, html_node() selects the element with the given class inside the current country block, and html_text(trim = TRUE) extracts its text without surrounding whitespace.

for (country in web_data) {
  country_name <- country %>% html_node(".country-name") %>% html_text(trim = TRUE)
  capital_name <- country %>% html_node(".country-capital") %>% html_text(trim = TRUE)
  population_data <- country %>% html_node(".country-population") %>% html_text(trim = TRUE) %>% as.numeric()
  area_data <- country %>% html_node(".country-area") %>% html_text(trim = TRUE) %>% as.numeric()

7. Bind the data to a data frame. Finish the loop by appending the extracted values, wrapped in a one-row data frame, to the list from step 5. After the loop, combine all list elements into a single data frame so the data is formatted as a neat table.

  # Add the data as a list element
  results_list <- append(results_list, list(data.frame(
    Country = country_name,
    Capital = capital_name,
    Population = population_data,
    Area = area_data
  )))
}

# Combine all list elements into a single data frame
results <- do.call(rbind, results_list)

8. Print the results. Finally, check if everything works correctly by printing the results into the console.

print(results)

9. Save the results. For a clean and easy way to view the data, save the results in a CSV format.

write.csv(results, "scraped_countries_data.csv", row.names = FALSE)

10. Run the script. To run the script, select the entire script (Ctrl + A or Command + A) and click Run, which is located in the top-right corner of the script window.

Here's the full script:

library(rvest)

url <- "https://www.scrapethissite.com/pages/simple/"
webpage <- read_html(url)

# Select every element that holds one country's details
web_data <- webpage %>% html_nodes(".country")

# Initialize an empty list to store the scraped data
results_list <- list()

for (country in web_data) {
  country_name <- country %>% html_node(".country-name") %>% html_text(trim = TRUE)
  capital_name <- country %>% html_node(".country-capital") %>% html_text(trim = TRUE)
  population_data <- country %>% html_node(".country-population") %>% html_text(trim = TRUE) %>% as.numeric()
  area_data <- country %>% html_node(".country-area") %>% html_text(trim = TRUE) %>% as.numeric()

  # Add the data as a list element
  results_list <- append(results_list, list(data.frame(
    Country = country_name,
    Capital = capital_name,
    Population = population_data,
    Area = area_data
  )))
}

# Combine all list elements into a single data frame
results <- do.call(rbind, results_list)

# Print the results
print(results)

# Save the data to a CSV file
write.csv(results, "scraped_countries_data.csv", row.names = FALSE)
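The same extraction can also be written without a loop, since html_node() and html_text() work on a whole set of nodes at once. The following is a sketch of that alternative, run on a hand-written HTML fragment so it works without a network connection. The fragment only imitates the structure of the practice site; its values and the helper name field_text are placeholders chosen for illustration.

```r
library(rvest)

# Hand-written HTML imitating the practice page's structure (placeholder values)
sample_html <- '<html><body>
  <div class="country">
    <h3 class="country-name"> Examplia </h3>
    <span class="country-capital">Sample City</span>
    <span class="country-population">1000</span>
    <span class="country-area">25.5</span>
  </div>
  <div class="country">
    <h3 class="country-name"> Testland </h3>
    <span class="country-capital">Demo Town</span>
    <span class="country-population">2500</span>
    <span class="country-area">40.0</span>
  </div>
</body></html>'

sample_page <- read_html(sample_html)
countries <- sample_page %>% html_nodes(".country")

# Helper (hypothetical name): text of one child element per country block
field_text <- function(nodes, selector) {
  nodes %>% html_node(selector) %>% html_text(trim = TRUE)
}

# Each column is extracted for all countries in a single call
sample_results <- data.frame(
  Country    = field_text(countries, ".country-name"),
  Capital    = field_text(countries, ".country-capital"),
  Population = as.numeric(field_text(countries, ".country-population")),
  Area       = as.numeric(field_text(countries, ".country-area"))
)

print(sample_results)
```

Because html_node() returns one result per country block, a missing field shows up as NA in that row instead of shifting the values of later rows.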

Advanced web scraping techniques in R

Scraping HTML tables

Some websites, such as Wikipedia, have easy-to-read information in HTML tables. These are very convenient for scraping as they only require a few lines of code. Here's what the process looks like:

1. Import the rvest package. You'll need to use the rvest package for easy HTML download and manipulation.

library(rvest)

2. Define the URL. As with static pages, start by defining the URL object. As an example, let's use a table from the Wikipedia article that lists the Eurovision Song Contest winners.

url <- "https://en.wikipedia.org/wiki/List_of_Eurovision_Song_Contest_winners"

3. Read the contents. Get the full HTML of the page and assign it to the webpage object.

webpage <- read_html(url)

4. Locate the table. Identify the table's ID or class name. In this case, the target table has the class "wikitable", which the ".wikitable" selector matches. html_node() returns only the first match, which is the table we want because it's the first table with that class in the document. Then, use the html_table() function to parse the table into the winners_table variable.

winners_table <- webpage %>% html_node(".wikitable") %>% html_table()

5. Print the information. Optional, but you can include this line to see the results in the RStudio console.

print(winners_table)

6. Save it as a CSV file. It's a simple and widely supported format that can be easily read by humans and computers alike.

write.csv(winners_table, "eurovision_winners.csv", row.names = FALSE)

Here's the full code:

library(rvest)

url <- "https://en.wikipedia.org/wiki/List_of_Eurovision_Song_Contest_winners"
webpage <- read_html(url)

winners_table <- webpage %>% html_node(".wikitable") %>% html_table()

print(winners_table)
write.csv(winners_table, "eurovision_winners.csv", row.names = FALSE)
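To see what html_table() produces without depending on a live page, here's a minimal sketch that parses a hand-written table. The rows are placeholders, not the actual Wikipedia data.

```r
library(rvest)

# Hand-written table (placeholder rows, not the Wikipedia data)
sample_html <- '<html><body>
  <table class="wikitable">
    <tr><th>Year</th><th>Winner</th></tr>
    <tr><td>2001</td><td>Country A</td></tr>
    <tr><td>2002</td><td>Country B</td></tr>
  </table>
</body></html>'

sample_table <- read_html(sample_html) %>%
  html_node(".wikitable") %>%
  html_table()

# The header cells become the column names of the result
print(sample_table)
```

If a page contains several tables with the same class, html_nodes() followed by html_table() should return a list with one table per match, from which you can pick the one you need.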

Scraping dynamic content

Many modern websites use JavaScript to load content dynamically, meaning traditional scraping methods may not get all the data. To handle this, you need a way to render the page like a real browser before extracting the content.

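The tidyverse collection doesn't render JavaScript by itself, but its dplyr package makes it easy to combine the results scraped from many pages. The example below targets a practice site that delivers its pages as regular HTML and walks through its pagination. For content that only appears after JavaScript runs, you'd additionally need a browser-based tool, such as RSelenium or the read_html_live() function in recent rvest versions. Here's how to build the paginated scraper: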

1. Install tidyverse. Get the required library by running the following command in the RStudio console:

install.packages("tidyverse")

2. Define the target website. In this scenario, use the "Quotes to Scrape" site – another sandbox for practicing web scraping.

base_url <- "https://quotes.toscrape.com"

3. Create a scraping function. Define a new function, scrape_page, that will hold the logic of how to scrape a page.

scrape_page <- function(base_url) {

4. Read the HTML contents. Get the HTML contents of the URL.

page <- read_html(base_url)

5. Extract quotes and authors. Select and extract the quotes using CSS selectors. Like before, we find the required HTML nodes by inspecting the page via developer tools. The same process is repeated for quote authors.

quotes <- page %>% html_nodes(".quote .text") %>% html_text(trim = TRUE)
authors <- page %>% html_nodes(".quote .author") %>% html_text(trim = TRUE)

6. Extract tags. Repeat the process for tags. Since each quote has multiple tags, we read the whole tags block as one string, use gsub() to remove the "Tags:" prefix, and then replace the whitespace between tags with commas. As a result, we'll get all tags from the same quote in one table row.

tags_per_quote <- page %>%
  html_nodes(".quote .tags") %>%
  html_text(trim = TRUE) %>%
  gsub("^Tags:\\s*", "", .) %>%
  gsub("\\s+", ", ", .)

7. Create a data frame. Combine the extracted quotes, authors, and tags into a data frame with one row per quote. Finally, the function ends by returning the data frame as the result.

all_quotes <- data.frame(
  Quote = quotes,
  Author = authors,
  Tags = tags_per_quote
)
return(all_quotes)
}

8. Implement pagination. Since many sites split their content across several pages, add code to go through multiple pages and extract the data from each. With the next_page object, we initialize the pagination process by starting with the first page. page_count sets a counter to keep track of the page number.

next_page <- "/"
page_count <- 1

9. Initialize the data frame. Create an empty data frame outside the function to collect the results from all pages. It has the same columns as the one in step 7 and declares each of them as a character column.

all_quotes <- data.frame(
  Quote = character(),
  Author = character(),
  Tags = character(),
  stringsAsFactors = FALSE
)

10. Set a loop. Loop through pages as long as there's a next page and the page count is less than or equal to 10. You can scrape more or fewer pages by adjusting the number. When a page has no "Next" link, html_attr() returns NA rather than NULL, so the condition checks for NA.

while (!is.na(next_page) && page_count <= 10) {

11. Combine the base URL with the relative path. To create a full URL, you'll need to combine the base URL with the path that defines which page should be shown. Currently, it will be the first page, as next_page has the "/" value. It’ll change with step 13.

current_url <- paste0(base_url, next_page)
message("Scraping page ", page_count, ": ", current_url)

12. Repeat the scraping function. Call the previously established scrape_page function to extract data from the current page. Then, append the newly scraped data to the main data frame with bind_rows(), which comes from the dplyr package and is available once you load tidyverse with library(tidyverse).

page_data <- scrape_page(current_url)
all_quotes <- bind_rows(all_quotes, page_data)

13. Find the next page link. The next page link can be found by targeting the HTML node associated with the "Next" button and getting the href value. The page counter is also incremented, and the loop ends here.

next_page <- read_html(current_url) %>% html_node(".pager .next a") %>% html_attr("href")
page_count <- page_count + 1
}

14. Print and save the results. As the last step, print the results in the console and save them to a CSV file.

print(all_quotes)
write.csv(all_quotes, "quotes.csv", row.names = FALSE)

The full script:

library(rvest)
library(tidyverse)

base_url <- "https://quotes.toscrape.com"

scrape_page <- function(base_url) {
  page <- read_html(base_url)
  quotes <- page %>% html_nodes(".quote .text") %>% html_text(trim = TRUE)
  authors <- page %>% html_nodes(".quote .author") %>% html_text(trim = TRUE)
  # Strip the "Tags:" prefix and separate the tags with commas
  tags_per_quote <- page %>%
    html_nodes(".quote .tags") %>%
    html_text(trim = TRUE) %>%
    gsub("^Tags:\\s*", "", .) %>%
    gsub("\\s+", ", ", .)
  all_quotes <- data.frame(
    Quote = quotes,
    Author = authors,
    Tags = tags_per_quote
  )
  return(all_quotes)
}

next_page <- "/"
page_count <- 1

all_quotes <- data.frame(
  Quote = character(),
  Author = character(),
  Tags = character(),
  stringsAsFactors = FALSE
)

# html_attr() returns NA when there is no "Next" link, so test for NA
while (!is.na(next_page) && page_count <= 10) {
  current_url <- paste0(base_url, next_page)
  message("Scraping page ", page_count, ": ", current_url)
  page_data <- scrape_page(current_url)
  all_quotes <- bind_rows(all_quotes, page_data)
  next_page <- read_html(current_url) %>% html_node(".pager .next a") %>% html_attr("href")
  page_count <- page_count + 1
}

print(all_quotes)
write.csv(all_quotes, "quotes.csv", row.names = FALSE)
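Two details of this script are easier to see on a small offline example: how to collect the tags of each quote as one comma-separated string, and what html_attr() returns when a page has no "Next" link. The sketch below uses a hand-written fragment that imitates the markup of the practice site. The quote text, the tags, and the helper name collapse_tags are illustrative assumptions.

```r
library(rvest)

# Hand-written fragment imitating one page of quotes (placeholder content)
sample_html <- '<html><body>
  <div class="quote">
    <span class="text">First sample quote</span>
    <small class="author">Author One</small>
    <div class="tags">Tags:
      <a class="tag">life</a>
      <a class="tag">books</a>
    </div>
  </div>
  <div class="quote">
    <span class="text">Second sample quote</span>
    <small class="author">Author Two</small>
    <div class="tags">Tags:
      <a class="tag">humor</a>
    </div>
  </div>
</body></html>'

sample_page <- read_html(sample_html)
quote_nodes <- sample_page %>% html_nodes(".quote")

# Helper (hypothetical name): join the tags of a single quote with commas
collapse_tags <- function(quote_node) {
  tags <- quote_node %>% html_nodes(".tag") %>% html_text(trim = TRUE)
  paste(tags, collapse = ", ")
}

sample_tags <- vapply(quote_nodes, collapse_tags, character(1))
print(sample_tags)

# This fragment has no pager, so the selector matches nothing
# and html_attr() returns NA rather than NULL
sample_next <- sample_page %>% html_node(".pager .next a") %>% html_attr("href")
print(is.na(sample_next))
```

Selecting the individual ".tag" elements avoids depending on the exact whitespace inside the tags block. In a pagination loop, the NA result means the stop condition should test is.na(next_page), since is.null() would never be TRUE here.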

Data cleaning and storage

After you're done scraping, you can access the data by loading the CSV file into R and storing it in an object:

data <- read.csv("file_name.csv")

At this stage, you no longer need to repeat the scraping process each time you need the data. Instead, you can work directly with the CSV file, applying various operations to clean and manipulate the data. Here are some common and useful ones:

- Handle missing data. Use na.omit(data) to remove rows with missing values or data[is.na(data)] <- 0 to replace them with a default value.

- Rename columns. For better readability, you can use colnames(data) <- c("NewName1", "NewName2") to assign meaningful column names.

- Convert data types. data$column <- as.numeric(data$column) or as.character(data$column) ensures columns are in the correct format for analysis.

- Remove duplicates. Use data <- distinct(data) (from the dplyr package) to eliminate duplicate rows and keep unique records.

- Export cleaned data. Once you're done with manipulating and cleaning data, use write.csv(data, "cleaned_data.csv", row.names = FALSE) to save the dataset for future use.
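The operations above can be chained into a short cleaning routine. Here's a minimal sketch on a made-up data frame; the column names and values are placeholders. It uses base R's unique() in place of dplyr's distinct() so that no extra package is needed, and it writes to a temporary folder to avoid overwriting any of your files.

```r
# Small made-up data frame with the kinds of problems listed above
data <- data.frame(
  V1 = c("Examplia", "Testland", "Testland", "Nowhere"),
  V2 = c("1000", "2500", "2500", NA),
  stringsAsFactors = FALSE
)

# Rename columns to something meaningful
colnames(data) <- c("Country", "Population")

# Convert the population column from text to numbers
data$Population <- as.numeric(data$Population)

# Drop rows with missing values, then remove duplicate rows
data <- na.omit(data)
data <- unique(data)

print(data)

# Save the cleaned data to a temporary location
output_path <- file.path(tempdir(), "cleaned_data.csv")
write.csv(data, output_path, row.names = FALSE)
```

Whether to drop rows with missing values or replace them with a default depends on the analysis; dropping is used here only to keep the example short.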

Common challenges and how to overcome them

Web scraping with R can be powerful, but it comes with its own set of challenges, from handling dynamic content to dealing with messy data. Here are some common issues you might encounter and ways to tackle them:

- Blocked requests. Websites may detect and block scraping attempts. You can add user-agent headers, introduce delays between requests (for example, with Sys.sleep()), or use proxies to overcome limitations and avoid detection. Here's an example of sending a request through a proxy with httr's GET() and parsing the response with rvest:

library(rvest)
library(httr)

url <- "http://example.com"
# Send the request through a proxy, then parse the returned HTML
page <- read_html(GET(url, use_proxy("http://proxy_ip:port")))

- Inconsistent HTML structure. Web pages may have varying layouts, making it hard to extract data reliably. Use html_nodes() with more specific selectors or xpath for better targeting.

- Encoding issues. Some pages use special characters that don’t render correctly in R. Use Encoding(data$column) <- "UTF-8" to fix character encoding problems.

- Messy or incomplete data. Scraped data often contains missing values or extra whitespace. Use the various data cleaning methods mentioned before to remove unwanted characters and ensure data quality.
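For pages whose layout varies, one option is to try several selectors in order and keep the first one that matches. The sketch below illustrates the idea on two hand-written fragments; the markup, the selectors, and the helper name first_match are assumptions made for this example.

```r
library(rvest)

# Two hand-written fragments with different markup for the same field
layout_a <- read_html('<html><body><span class="price">  19.99 </span></body></html>')
layout_b <- read_html('<html><body><div id="cost">24.50\n   USD</div></body></html>')

# Helper (hypothetical name): try selectors in order, return the first match
first_match <- function(page, selectors) {
  for (selector in selectors) {
    value <- page %>% html_node(selector) %>% html_text(trim = TRUE)
    if (!is.na(value)) {
      # Collapse runs of whitespace left over from the page layout
      return(gsub("\\s+", " ", value))
    }
  }
  NA_character_
}

selectors <- c(".price", "#cost")
print(first_match(layout_a, selectors))
print(first_match(layout_b, selectors))

# The same element can also be targeted with an XPath expression
xpath_value <- layout_b %>%
  html_node(xpath = "//div[@id='cost']") %>%
  html_text(trim = TRUE)
print(xpath_value)
```

Returning NA when nothing matches keeps the failure visible in the resulting data, where the cleaning steps described earlier can handle it.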


End note

That's everything you need to get started with web scraping in R. You've learned how to extract data from static pages, tables, and paginated content, equipping you to handle many types of websites.

Apply best practices for cleaning, organizing, and storing your data while overcoming web limitations with headers, request delays, and proxies. By making full use of R's powerful data manipulation tools, you can quickly turn raw web data into actionable insights.

About the author

Zilvinas Tamulis

Technical Copywriter

A technical writer with over 4 years of experience, Žilvinas blends his studies in Multimedia & Computer Design with practical expertise in creating user manuals, guides, and technical documentation. His work includes developing web projects used by hundreds daily, drawing from hands-on experience with JavaScript, PHP, and Python.


All information on Smartproxy Blog is provided on an as is basis and for informational purposes only. We make no representation and disclaim all liability with respect to your use of any information contained on Smartproxy Blog or any third-party websites that may be linked therein.