lxml Tutorial: Parsing HTML and XML Documents

Keepin’ it short and sweet: data parsing is a process of computer software converting unstructured and often unreadable data into structured and readable format. Parsing offers a lot of benefits, some of which include work optimization, saving time, reducing costs, and many more; in addition, you can use parsed data in plenty of different situations.

Even tho that sounds epic, parsing itself can be quite complicated. But hold on, buddy, and get ready to explore a step-by-step process on how to parse HTML and XML documents using lxml.

What is HTML and XML?

HTML

HTML (or Hypertext Markup Language) is a markup language that helps create and design web content. A hypertext is a text that allows a user to reference other pieces of text. A markup language is a series of markings that define elements within a document.

HTML’s focus is to display data, so it slaps when web users want to create and structure sections, paragraphs, and links. Its document has the extension .htm or .html. Just FYI, this is how HTML code looks like:

<html><body><h1>Smartproxy’s lxml Tutorial: Parsing HTML and XML Documents</h1><p>This is how HTML code looks like</p></body></html>

XML

XML stands for Extensible Markup Language. Simply put, its main focus is to store and transport data. The language is self-descriptive, as it may have sender and receiver info, a heading, a message body, etc. An XML document has the extension .xml. Here’s an example of XML code:

<example><heading>Smartproxy’s lxml Tutorial: Parsing HTML and XML Documents</heading><body>This is how XML code looks like</body></example>

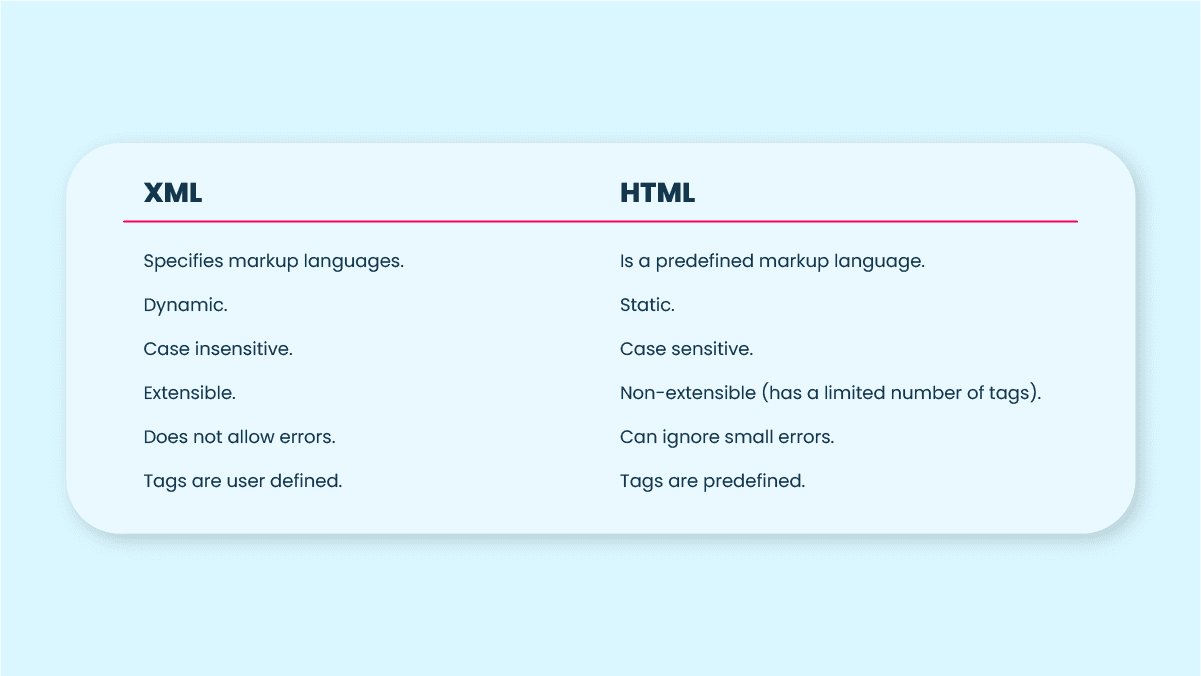

Difference between XML and HTML

Let’s set the record straight: while both XML and HTML are markup languages, they have quite a few differences. Let’s explore some of them.

What is lxml?

If you've never heard of lxml before, don’t sweat. The name may sound confusing but the explanation is simple.

See, lxml is a Python library that allows you to easily and effectively handle XML and HTML files. It refers to the XML toolkit with a Pythonic binding for the two C libraries: libxml2 and libxslt. lxml combines the speed and XML libraries’ features with the simplicity of a Python API.

It’s not the only library you can choose; however, lxml stands out by its ease of programming and performance. It has easy syntax and adaptive nature; in addition, reading and writing any size XML files is as fast as light. Well, almost!

Parse HTML and XML documents: lxml tutorial

Welp, this is where things get real. But don’t worry – we’ll get into the tutorial step by step.

First step: Install Python

OK, the first thing you need to do is to download and install Python on your computer. Without Python, lxml won’t have an environment to function in.

Second step: Install lxml

There are several ways to install lxml:

If you’re on Linux, simply run:

sudo apt-get install python3-lxml

For MacOS-X, a macport of lxml is available:

sudo port install py27-lxml

- pip.

To install lxml via pip, try this command:

pip install lxml

- apt-get.

Linux or macOS users can give a shot to this:

sudo apt-get install python-lxml

Third step: Create XML/HTML objects with ElementTree

1. Import ElementTree with this command:

from lxml import etree

2. Create tree elements:

root = etree.Element("html")body = etree.Element("body")heading = etree.Element("h1")paragraph = etree.Element("p")

3. Set element values and assign dependencies:

body.set("text", "teal")heading.text = 'A heading'paragraph.text = 'A paragraph'paragraph.set("align", "center")root.append(body)body.append(heading)body.append(paragraph)

4. Print the structured HTML to the console:

print(etree.tostring(root, pretty_print=True).decode())

That’s what you’ll print:

<html><body text="teal"><h1>A heading</h1><p align="center">A paragraph</p></body></html>

5. Convert the HTML object we created to a string which we will use later on.

html_string = etree.tostring(root)

Fourth step: Parse XML/HTML documents

1. Create an HTML object from string. You can do the same with XML:

html = etree.fromstring(html_string)

2. Retrieve text from the paragraph with find():

paragraph_text = html.find("body/p").text

That’s what you’ll print:

print(paragraph_text)

3. Retrieve text from the heading with xpath():

heading_text = html.xpath("//h1")[0].text

That’s what you’ll print:

print(heading_text)

Conclusion

Voila! Your nerd quotient in parsing HTML and XML documents has exponentially increased. But don’t forget to respect the site’s policy, to mind your browser fingerprinting, and to use essential tools, such as proxies. Not only will the proxies help ya avoid CAPTCHAs, IP bans, or flagging, but will also ensure your anonymity and the best parsing results.

About the author

James Keenan

Senior content writer

The automation and anonymity evangelist at Smartproxy. He believes in data freedom and everyone’s right to become a self-starter. James is here to share knowledge and help you succeed with residential proxies.

All information on Smartproxy Blog is provided on an as is basis and for informational purposes only. We make no representation and disclaim all liability with respect to your use of any information contained on Smartproxy Blog or any third-party websites that may belinked therein.