How To Choose The Right Selector For Web Scraping: XPath vs CSS

If you’re brand new to web scraping, you may not be familiar with selectors yet. Let us introduce ya: selectors are patterns that find and return elements on a web page. They’re an essential part of a scraper, as they affect its results, efficiency, and speed.

Yep, understanding the idea of a selector isn’t that complicated. Finding the right selector might be. To be honest, the two languages used to write selectors, XPath and CSS, each have their own pros and cons, so choosing between them can quickly become a headache. But here’s some good news: we’re here to help! Let’s explore it together.

What is an XPath selector?

XPath stands for XML Path Language. In essence, it’s a query language with a compact, non-XML syntax that makes it easy for you to identify elements in XML documents (and in HTML pages, once a parser has turned them into a tree).

You may have already wondered whether there’s a meaning behind the name XML Path. The answer is… yes! An XPath expression describes a route through the document, step by step, from a starting point to the element you want. BINGO, that’s a path.

XPath might be your best buddy in plenty of contexts: it’s used to point to specific nodes, to query XML repositories, and in many other applications. There are two ways to write an XPath:

Absolute

An absolute XPath starts with the / symbol. It’s usually quite long and hard to maintain.

It spells out the complete path, from the root node to the element that you wanna identify. However, if any element along that path is added, removed, or moved, the absolute XPath becomes invalid. So watch out.

Relative

A relative XPath starts with the // symbols. It’s shorter than the absolute XPath.

It searches for the element you wanna identify anywhere in the document instead of walking down from the root node. As a result, adding or removing elements elsewhere on the page usually won’t break a relative XPath. That’s why it works well for automation.
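To see why this matters, here’s a minimal sketch using Python’s built-in xml.etree.ElementTree module, which supports a limited subset of XPath. The two pages are made-up examples in which a layout change wraps the navigation <div> in an extra <section>. ElementTree won’t run a path that starts with / from an element, so the "absolute" path is written as the full chain of steps below the root <html> element instead.

```python
import xml.etree.ElementTree as ET

# Hypothetical page before and after a layout change: the second version
# wraps the navigation <div> in an extra <section> element.
BEFORE = """<html><body>
  <div id="navbar"><a id="smart" href="https://smartproxy.com/">Visit Smartproxy</a></div>
</body></html>"""
AFTER = """<html><body><section>
  <div id="navbar"><a id="smart" href="https://smartproxy.com/">Visit Smartproxy</a></div>
</section></body></html>"""

# "Absolute" style: every step from <html> down to <a> is spelled out.
ABSOLUTE = "./body/div/a"
# Relative style: an <a> with id="smart" at any depth.
RELATIVE = ".//a[@id='smart']"

results = {}
for label, page in (("before", BEFORE), ("after", AFTER)):
    root = ET.fromstring(page)  # root is the <html> element
    results[label] = (root.find(ABSOLUTE) is not None,
                      root.find(RELATIVE) is not None)
    print(label, "absolute found:", results[label][0],
          "| relative found:", results[label][1])

# before absolute found: True | relative found: True
# after absolute found: False | relative found: True
```

The relative expression only cares that an <a> with id="smart" exists somewhere, so it survives the new wrapper, while the step-by-step path no longer matches.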

Let’s take a look at a basic format of XPath (this one is the relative one, tho!) and try to understand its syntax:

//tagname[@attribute='value']

This is what it means:

- //: select matching nodes at any depth in the document;

- tagname: the type of HTML element;

- @: attribute selector;

- attribute: the attribute of the node;

- value: the value of the attribute.
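Here’s that pattern in action, as a sketch using Python’s built-in ElementTree module (its XPath support is limited, but it covers this form). Because ElementTree searches from an element rather than from the whole document, the expression starts with .// instead of //.

```python
import xml.etree.ElementTree as ET

# Navigation markup with three links.
PAGE = """<div id="navbar">
  <a href="https://smartproxy.com/" id="smart" class="nav">Visit Smartproxy</a>
  <a href="https://smartproxy.com/blog" class="nav">Check out our Blog</a>
  <a href="https://smartproxy.com/web-scraping" class="nav">Learn more about web scraping</a>
</div>"""

root = ET.fromstring(PAGE)

# //tagname[@attribute='value'] with tagname=a, attribute=class, value=nav.
nav_links = root.findall(".//a[@class='nav']")
print([link.get("href") for link in nav_links])

# A more specific attribute value narrows the result to a single element.
smart = root.find(".//a[@id='smart']")
print(smart.text)  # Visit Smartproxy
```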

XPath: pros and cons

Pros

XPath has some dope features to offer. It lets you navigate up the DOM when lookin’ for elements to scrape, for example from a link to the block that contains it. If you only know part of an attribute value or of the text, you don’t have to sweat: you can use the contains() function to search for partial matches. Oh, and by the way, XPath slaps even when scraping with old browsers, including ancient versions of Internet Explorer.
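Here’s a small sketch of moving up the tree with Python’s built-in ElementTree, whose limited XPath subset does support the .. (parent) step. ElementTree doesn’t implement contains(), so the partial match below is emulated with a plain Python check; in a full XPath 1.0 engine you’d write //a[contains(@href, 'blog')] instead.

```python
import xml.etree.ElementTree as ET

PAGE = """<html><body>
  <div id="navbar">
    <a href="https://smartproxy.com/" id="smart" class="nav">Visit Smartproxy</a>
    <a href="https://smartproxy.com/blog" class="nav">Check out our Blog</a>
  </div>
</body></html>"""

root = ET.fromstring(PAGE)

# Navigate up: find the link, then step to its parent with "..".
container = root.find(".//a[@id='smart']/..")
print(container.tag, container.get("id"))  # div navbar

# Partial match: a stand-in for XPath's contains(@href, 'blog'),
# because ElementTree's XPath subset has no contains() function.
blog_links = [a for a in root.iter("a") if "blog" in a.get("href", "")]
print([a.text for a in blog_links])  # ['Check out our Blog']
```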

Cons

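Nothing is perfect, and XPath isn’t an exception. Its biggest con is that XPath selectors break easily, especially long absolute paths that depend on the page layout. XPath is also generally slower than CSS, and its complexity can make expressions hard to read.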

What is a CSS selector?

In a nutshell, CSS stands for Cascading Style Sheets, a text-based stylesheet language. It controls the formatting and display of HTML documents. So, thanks to CSS, you can choose the color or the font of the text, the spacing between paragraphs, the column sizes, and much more.

To decide which elements a style applies to, CSS uses selectors: patterns that match elements by their tag, class, ID, attributes, or position. Scrapers reuse the same patterns to pick out the content they want to test, edit, or copy. In other words, selectors help you target the HTML elements you wanna style, or in our case, scrape.

CSS selectors come in several categories:

Simple

These select elements by tag name, class, or ID, for example a, .nav, or #smart. Each one checks a single element on its own, without looking at its relationships to other elements.

Attribute

Attribute selectors match elements by the presence or value of an attribute, written in square brackets, such as [href] or [class="nav"]. Operators like ^= (starts with), $= (ends with), and *= (contains) allow partial matches.

Pseudo

Pseudo-class and pseudo-element selectors use keywords, added after a colon, to select an element in a specific state or position (such as :hover or :first-child) or a specific part of it (such as ::first-line).

Let’s see the basic format of a CSS selector:

tagname[attribute=value]

Here,

- tagname: the type of HTML element;

- attribute: the attribute of the node;

- value: the value of the attribute.
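Python’s standard library has no CSS selector engine (third-party tools such as Beautiful Soup’s select() fill that gap), so this sketch instead translates the basic tagname[attribute=value] form into the matching XPath and runs it with ElementTree. css_basic_to_xpath is a hypothetical helper written for this example, and it only understands this one form.

```python
import re
import xml.etree.ElementTree as ET

# Matches only tagname[attribute=value], with optional quotes around value.
BASIC_CSS = re.compile(r"^(\w+)\[([\w-]+)=['\"]?([^'\"\]]+)['\"]?\]$")

def css_basic_to_xpath(selector):
    """Hypothetical helper: rewrite tagname[attribute=value] as the
    equivalent relative XPath, tagname[@attribute='value']."""
    match = BASIC_CSS.match(selector)
    if match is None:
        raise ValueError(f"unsupported selector: {selector!r}")
    tag, attr, value = match.groups()
    return f".//{tag}[@{attr}='{value}']"

PAGE = """<div id="navbar">
  <a href="https://smartproxy.com/" class="nav">Visit Smartproxy</a>
  <a href="https://smartproxy.com/blog" class="nav">Check out our Blog</a>
</div>"""

root = ET.fromstring(PAGE)
xpath = css_basic_to_xpath("a[class=nav]")
print(xpath)  # .//a[@class='nav']
links = [a.text for a in root.findall(xpath)]
print(links)  # ['Visit Smartproxy', 'Check out our Blog']
```

One caveat: [class=nav] compares the whole attribute value, just like @class='nav' in XPath. The class selector .nav is looser, since it also matches class="nav active", so it has no exact equivalent in this simple translation.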

CSS: pros and cons

Pros

CSS may be your go-to selector for various reasons. First of all, developers already use CSS selectors for styling, so the same selectors work on the development side too. They’re also supported by all major browsers. Finally, class and ID selectors give you a high chance of finding the elements you want.

Cons

CSS has gone through several levels (CSS1, CSS2, CSS3, and later modules), and not every browser or tool supports every selector. That can create confusion among web developers and beginners alike.

Nodes and relationships: XPath and CSS

Here’s the thing: you can’t really start web scraping without a basic knowledge of how a document is structured. So, fasten your seatbelt, and let’s learn the beginner terminology for both XPath and CSS.

Let’s start with an example:

<html>
  <head>
    <title>How To Choose The Right Selector For Web Scraping: XPath vs CSS</title>
  </head>
  <body>
    <h2 id="name">Smartproxy.com</h2>
    <div id="navbar">
      <a href="https://smartproxy.com/" id="smart" class="nav">Visit Smartproxy</a>
      <a href="https://smartproxy.com/blog" class="nav">Check out our Blog</a>
      <a href="https://smartproxy.com/web-scraping" class="nav">Learn more about web scraping</a>
    </div>
  </body>
</html>

Nodes

A node is any item in a document’s tree. Every XML or HTML document contains these three basic types of nodes:

- Element Node. They’re simply known as elements, or tags. From the code above:

<title>How To Choose The Right Selector For Web Scraping: XPath vs CSS</title>

- Attribute Node. They’re the attributes of an element node. For instance,

id="smart"

- Atomic Value. These are the final values: the text of an element or the value of an attribute. For example, it can be:

“Learn more about web scraping”.

There are more types of nodes, such as entity nodes, CDATA section nodes, document nodes, and others.

Relationships

All of these nodes can have different kinds of relationships with each other. Using the code above as an example, the main ones are:

- Parent. This is the element one level up in the code. Every element except the root has exactly one parent: the parent of each <a> element is <div>.

- Children. These are the sub-elements one level down in the code: the elements <h2> and <div> are children of <body>.

- Siblings. These are elements that share the same parent: <h2> and <div> are siblings, as they share the parent <body>.

- Descendants. These are all the elements any number of levels down: the element <title> is a descendant of <head>.

- Ancestors. These are all the elements any number of levels up: the ancestors of <a> are <div>, <body>, and <html>.

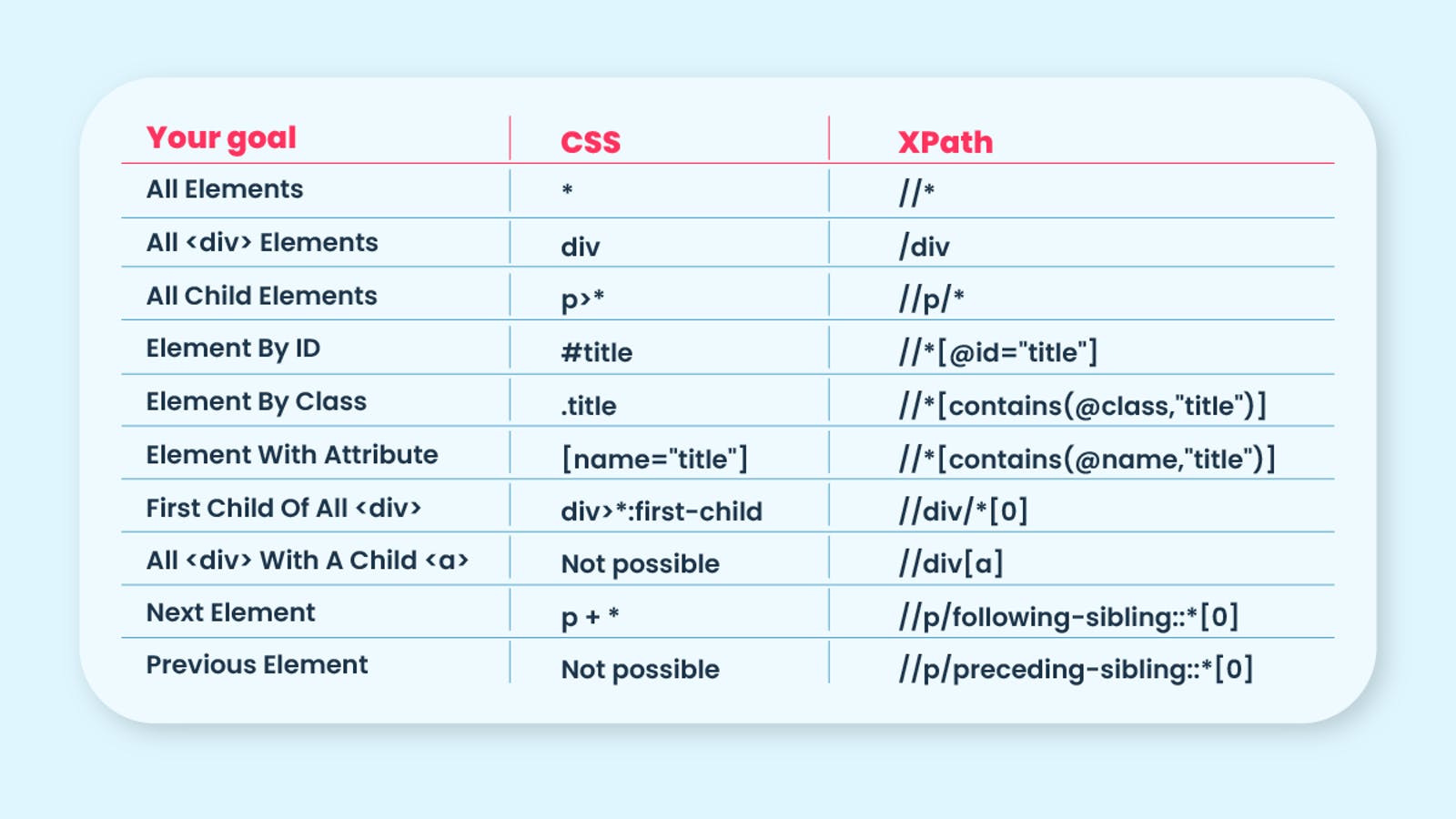

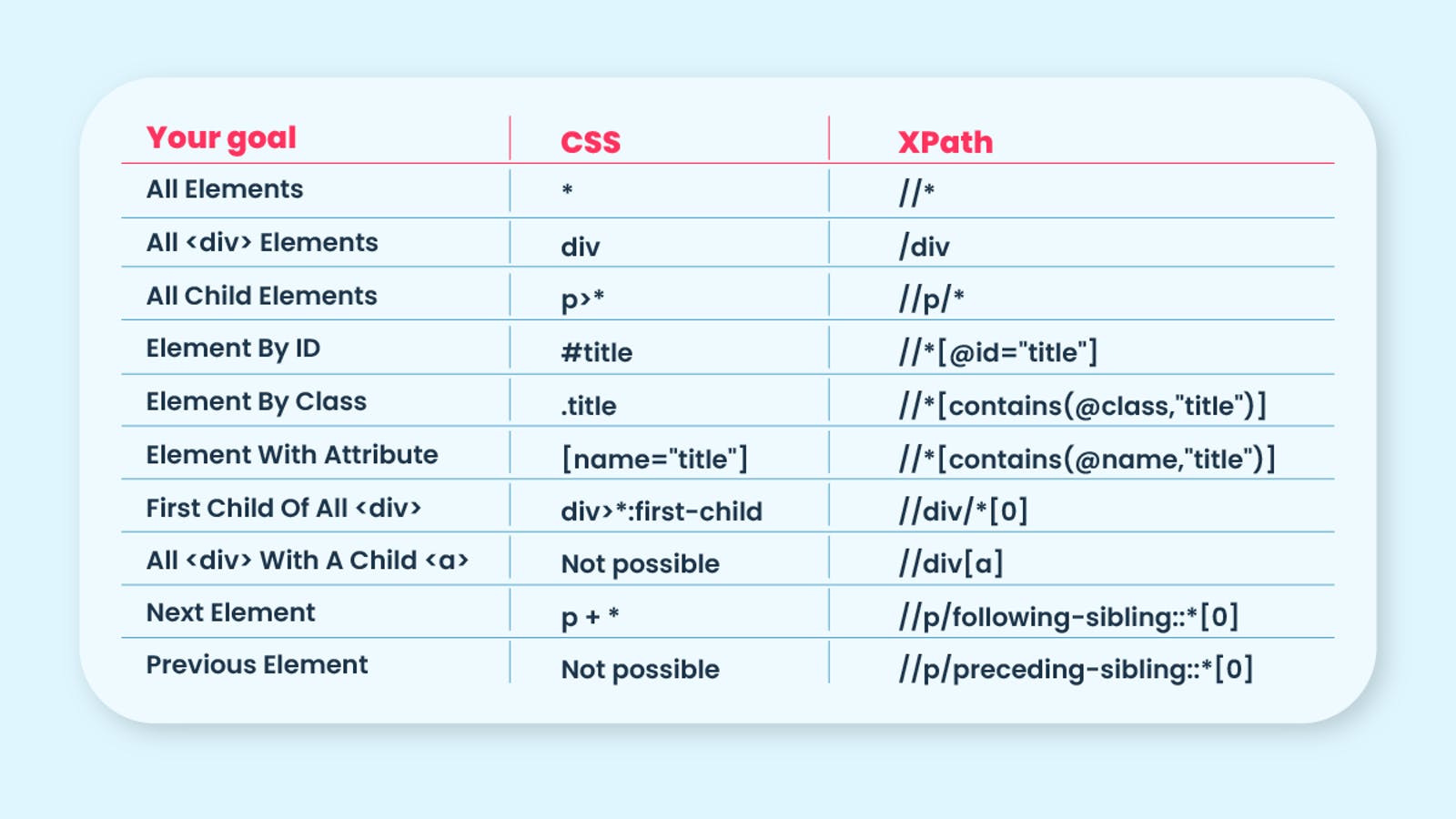
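The node types and relationships above map directly onto a parsed document tree. Here’s a minimal sketch that loads the sample page from this section with Python’s built-in ElementTree; this markup happens to be well-formed XML, while real-world HTML usually needs a more forgiving HTML parser. ElementTree elements don’t keep a pointer to their parent, so the parent_of dictionary is a helper built just for this example.

```python
import xml.etree.ElementTree as ET

# The sample page from this section, as a Python string.
PAGE = ('<html><head><title>How To Choose The Right Selector For Web Scraping: '
        'XPath vs CSS</title></head><body><h2 id="name">Smartproxy.com</h2>'
        '<div id="navbar"><a href="https://smartproxy.com/" id="smart" class="nav">'
        'Visit Smartproxy</a><a href="https://smartproxy.com/blog" class="nav">'
        'Check out our Blog</a><a href="https://smartproxy.com/web-scraping" '
        'class="nav">Learn more about web scraping</a></div></body></html>')

root = ET.fromstring(PAGE)

# Helper for this sketch: map every child element to its parent.
parent_of = {child: parent for parent in root.iter() for child in parent}

first_link = root.find(".//a[@id='smart']")

# Node types: element node, attribute node, atomic (text) value.
print(first_link.tag)           # a
print(first_link.attrib["id"])  # smart
print(first_link.text)          # Visit Smartproxy

# Parent: the element one level up.
print(parent_of[first_link].tag)  # div

# Children of <body>: one level down.
body = root.find("body")
print([child.tag for child in body])  # ['h2', 'div']

# Siblings of <h2>: other children of the same parent.
h2 = root.find(".//h2")
print([el.tag for el in parent_of[h2] if el is not h2])  # ['div']

# Descendants of <head>: iter() also yields <head> itself, so skip it.
head = root.find("head")
print([el.tag for el in head.iter() if el is not head])  # ['title']

# Ancestors of <a>: walk up the parent map until reaching the root.
ancestors = []
node = first_link
while node in parent_of:
    node = parent_of[node]
    ancestors.append(node.tag)
print(ancestors)  # ['div', 'body', 'html']
```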

Comparison: XPath vs CSS

Yeah, we got familiar with both of the selectors, but wait! Before jumping to conclusions on which one to choose, let’s compare them.

- Complexity. XPath tends to be more complex and harder to read than CSS. That makes CSS easier to learn and implement.

- Performance and speed. XPath is generally slower than CSS selectors.

- Consistency. XPath engines differ from browser to browser, which can make the same selector behave inconsistently. CSS selectors, on the other hand, work consistently across browsers.

- Text recognition. XPath can select elements by their text content, for example with text() or contains(). CSS selectors can’t match on text at all.

- Flow. With XPath, you can traverse the tree both ways: from parent to child and back up from child to parent. CSS allows a one-directional flow only, from parent to child.
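Text recognition and two-way flow are easiest to see together. This sketch uses Python’s built-in ElementTree module, which doesn’t support text(); its [.='...'] predicate (Python 3.7 and later) matches an element’s full text content instead, which works the same way for a simple link like this one. The .. step then moves back up to the containing block, something a plain CSS selector can’t express because of the text match.

```python
import xml.etree.ElementTree as ET

PAGE = """<div id="navbar">
  <a href="https://smartproxy.com/" class="nav">Visit Smartproxy</a>
  <a href="https://smartproxy.com/blog" class="nav">Check out our Blog</a>
</div>"""

root = ET.fromstring(PAGE)

# Text recognition: in full XPath this would be //a[text()='Check out our Blog'].
blog = root.find(".//a[.='Check out our Blog']")
print(blog.get("href"))  # https://smartproxy.com/blog

# Two-way flow: match the child by its text, then step up to its parent.
navbar = root.find(".//a[.='Check out our Blog']/..")
print(navbar.get("id"))  # navbar
```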

Choose your character

It’s finally time to choose your character! When web scraping, we’d suggest not focusing too much on one or two benefits. Your choice should depend on your situation: the features you need and the tools and browsers you have to stay compatible with. So, try to see the complete picture and explore both options yourself.

If you use a web scraping library such as Beautiful Soup, consider its find() and find_all() methods. They search by tag name, attributes, and text directly, so there’s no need to worry about choosing between the two selector languages. (Beautiful Soup also supports CSS selectors through select(), but not XPath.)

Conclusion

Choosing the right selector may be difficult, but it’s surely important when web scraping. To gather publicly accessible data, you also need suitable tools. For Google’s SERP results, pick our full-stack SERP Scraping API. We also have eCommerce and Web Scraping APIs if your targets aren’t limited to search engines. All our APIs deliver results at a 100% success rate and are backed by our elite proxies for your ultimate data gathering experience.

If you already have your own scraping setup, scale your project by increasing speed and IP stability with datacenter proxies. Go for residential proxies to unblock data in any location and to scrape websites that are extra sensitive to automated activity.

About the author

James Keenan

Senior content writer

The automation and anonymity evangelist at Smartproxy. He believes in data freedom and everyone’s right to become a self-starter. James is here to share knowledge and help you succeed with residential proxies.

All information on Smartproxy Blog is provided on an "as is" basis and for informational purposes only. We make no representation and disclaim all liability with respect to your use of any information contained on Smartproxy Blog or any third-party websites that may be linked therein.