Master VBA Web Scraping for Excel: A 2025 Guide

Excel is an incredibly powerful data management and analysis tool. But did you know that it can also automatically retrieve data for you? In this article, we’ll explore Excel's many features and its integration with Visual Basic for Applications (VBA) to effectively scrape and parse data from the web.

What is web scraping?

Web scraping is the process of making automated requests to websites, reading their content, and retrieving data from them. The data can then be parsed and structured into easy-to-read formats that significantly boost the efficiency of data analysis and drawing insights. Many applications and code solutions are available for various use cases and targets. In this article, we’ll explore a few methods available with Microsoft Excel.

Method 1: Using Excel web queries for data extraction

Excel has a built-in feature called web queries that lets you get information from websites directly. It’s an easy way to get data from structured tables found on web pages. The web query uses a built-in browser to load the website for you, so it’s an excellent solution for dynamic websites where content needs to be loaded.

Let’s use a page from the Books to Scrape website as an example. To get information from it, follow these steps:

- Open a blank spreadsheet in Microsoft Excel.

- Click on the Data tab.

- Select From Web inside the Get & Transform Data section.

- Enter the URL of the web page from which you want to get data and click OK.

- Select how you want to access the web content. You can choose between Anonymous, Windows, Basic, Web API, and Organizational account. Since accessing this website doesn’t require authentication or extra steps, simply go with Anonymous and click Connect.

- In the newly opened Navigator window, you can see a list of tables that Excel found on the website. Select Table 0 and click Load.

Just like that, the table is successfully loaded into the Excel spreadsheet. As you may have noticed, this method only imports tables from web pages. However, data comes in many different shapes and sizes, so it isn’t a perfect way to get everything you need. You’ll need solutions to get data from paragraphs, lists, and other HTML elements.

Method 2: Using automated web scraping tools

What’s a better tool for the job, if not a tool made precisely for the job? Web scrapers are applications built to scrape various websites with ease. You only need to provide the target you want to scrape and give it a few parameters and information it should return.

You may wonder how these tools can be used together with Microsoft Excel. Thankfully, many web scraping tools can export results in CSV or similar file formats, which Excel can read and open. This saves you the hassle of trying to make Excel spreadsheets do all of the web scraping with limited abilities, but it comes at the cost of relying on external tools that may not always be compatible with Excel.

Learn more about scraping tools and APIs and how they can help you gather data.

Method 3: Using Excel VBA for data extraction

Visual Basic for Applications (VBA) is a programming language integrated into various Microsoft applications, including Excel. It allows you to write custom scripts for automation, customization, and integration with other Microsoft Office applications. VBA is also a powerful tool for web scraping, as it can make HTTP requests to websites and retrieve the HTML content. It can also parse the content, extract the desired data, and present it nicely in an Excel spreadsheet.

Pros and cons of utilizing VBA for web scraping

Pros

- Integration. VBA integrates with Microsoft Office applications like Word or Excel, allowing you to automate workflows without needing a separate programming language or tool.

- Familiarity. Many professionals who work with data are already familiar with Excel and may have basic VBA knowledge, making it easy to grasp without extensive training.

- Customization. VBA provides a high level of customization, allowing you to tailor your web scraping scripts to specific websites and data extraction requirements.

- Rapid prototyping. With VBA, you can quickly prototype and test web scraping scripts directly within Excel, enabling faster development cycles and iteration.

- No additional software. Since VBA is built into Microsoft Office applications, you don't need to install or maintain additional software or dependencies to perform web scraping tasks.

- Limited capabilities. VBA has limitations compared to more advanced programming languages and web scraping tools. It may struggle with complex websites with dynamic content or JavaScript-heavy pages.

- Fragility. Web scraping scripts written in VBA can be fragile and prone to breaking if the structure of the target website changes. This requires frequent maintenance and updates to keep the scripts functioning correctly.

- Performance. VBA may not be the most efficient choice for large-scale or complex web scraping tasks, as it lacks some of the performance optimizations and concurrency features in other programming languages and tools.

- Security concerns. VBA scripts can pose security risks if they're not adequately secured or interact with sensitive data or external resources without proper authorization.

- Learning curve. While VBA may be familiar to Excel users, mastering web scraping techniques in VBA still requires learning new concepts and programming skills, especially for more advanced scraping tasks.

Cons

How to scrape data from a website with VBA

Set up MS Office

To get started, you must have Microsoft 365 set up on your system. It includes all of the Microsoft applications, including Excel and VBA.

Open Microsoft Excel

Open the Microsoft Excel application and select Blank workbook to create a new empty spreadsheet.

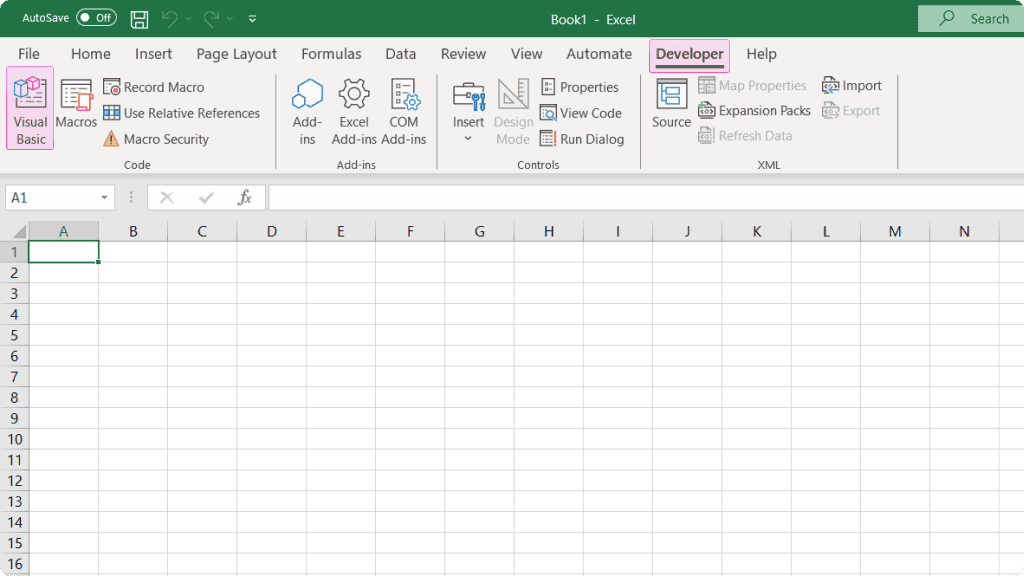

Enable the developer menu

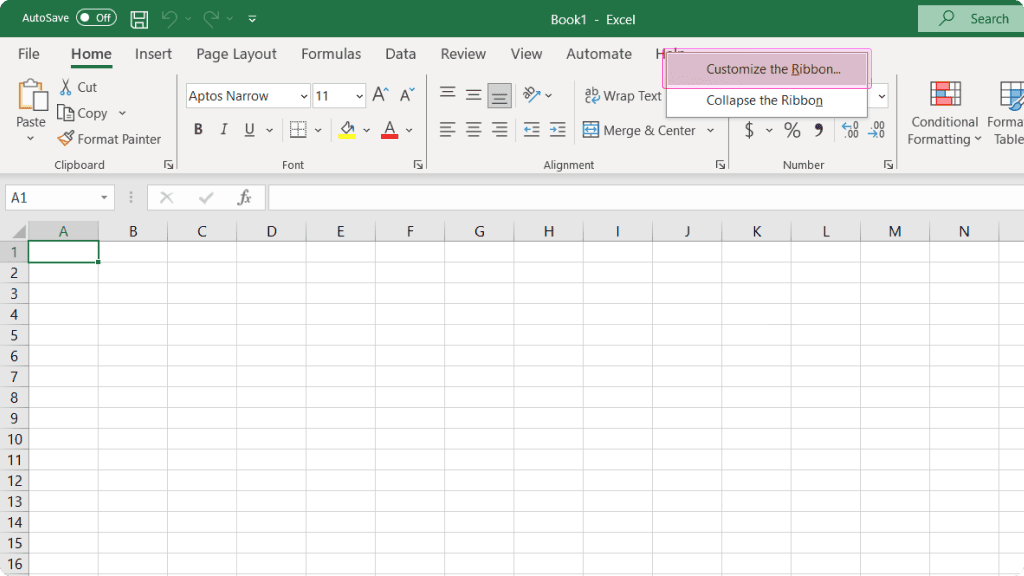

Navigate to the top of the application, where the ribbon with all the buttons is located. Right-click it and select Customize the Ribbon.

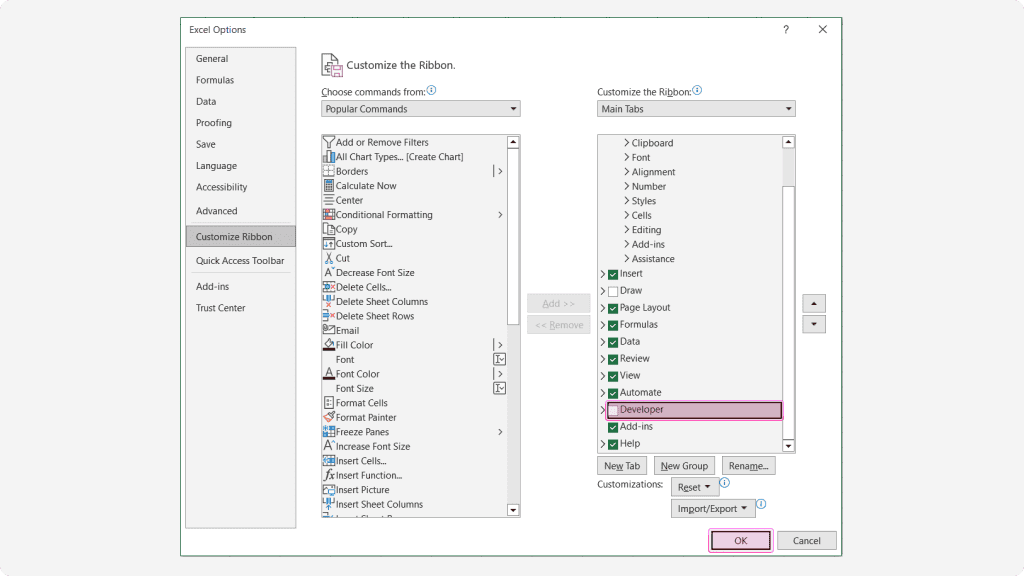

In the Excel Options window, you’ll see a list of available tabs on the right. Tick the checkbox next to Developer to enable it and click OK.

Open the Visual Basic application dialog

You’ll see the Developer tab in the ribbon when you return to the main Microsoft Excel window. Click on it to reveal various developer options. To open the VBA interface, click Visual Basic or use the keyboard shortcut Alt + F11.

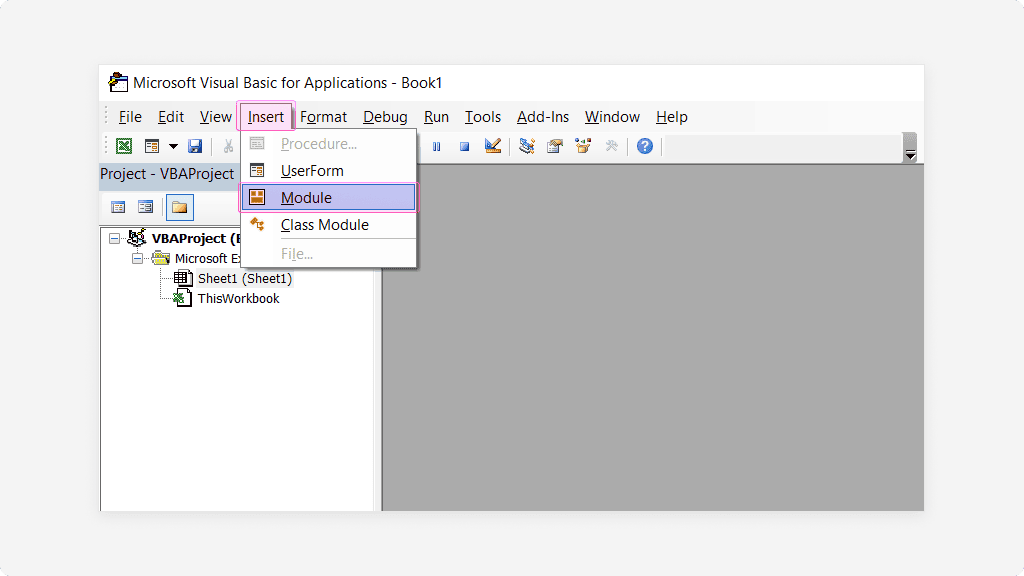

Insert a new Module

You can write scripts with VBA inside Modules. To create a new one, click Insert in the bar at the top and then Module.

A Modules folder will appear on the left with a new Module1 created and a blank area where you can write your code.

Write the script

If you’re already familiar with VBA, you can skip this section. However, for those less experienced or just starting out, you’ll need to learn some basics of VBA first. It would be impossible to cover everything in this article, but you can refer to online tutorials and courses, such as the official Microsoft learning page.

In simple terms, script execution happens in procedures. These are a set of instructions that should be executed to perform a task, such as adding two numbers together. Procedures have two types – sub-procedures and functions.

A sub-procedure is simply a set of instructions enclosed within Sub tags that tell the computer where to start executing the code and where to end it. They don’t return a value.

A function is a set of reusable code that only needs to be written once and then re-used in the code. It may or may not return a value.

If you’ve worked with another programming language before, you’ll find some familiar features, although written differently. Variables in VBA are declared with the keyword Dim and their data type (object, string, integer). To change a variable's value, use Set. You’ll also find the good old familiar conditions and loops to let your creativity flow or overflow (if you didn’t set an end condition to your while statement). Many things can be mentioned here, but that should cover the basics. Refer to the official documentation to learn more.

Automate Internet Explorer to open a website

Let’s write a simple script to open Internet Explorer, navigate to a website, and print its HTML content to the Immediate window.

Begin by creating a new sub-procedure and name it PrintHTML.

Sub PrintHTML()[Your code here…]End Sub

Inside the Sub, declare the variables you’re going to use. For this script, we’ll need an Internet Explorer object to open the browser, a URL to pass as a string, and a variable to hold the result in.

Dim Browser As ObjectDim URL As StringDim Result as String

Set the URL of the website to open:

URL = "https://example.com"

Next, create a new instance of Internet Explorer to launch and visit the web page. Set it to be visible (optional) so you can see the browser opening, navigating to the URL, and waiting for the page to load.

' Create a new instance of Internet ExplorerSet Browser = CreateObject("InternetExplorer.Application")' Make Internet Explorer visibleBrowser.Visible = True' Navigate to the specified URLBrowser.Navigate URL' Wait until the web page is fully loadedDo While Browser.Busy Or Browser.readyState <> 4DoEventsLoop

Finally, get the HTML content of the page and print it to the VBA Immediate window. Close and clean up after the task is done.

' Get the HTML content of the pageResult = Browser.document.body.innerHTML' Print the HTML content to the Immediate WindowDebug.Print Result' Close Internet ExplorerBrowser.Quit' Clean upSet Browser = Nothing

Here’s the entire script:

Sub PrintHTML()' Declare variablesDim Browser As ObjectDim URL As StringDim Result As String' Set the URL of the website to openURL = "https://example.com"' Create a new instance of Internet ExplorerSet Browser = CreateObject("InternetExplorer.Application")' Make Browser visible (for testing purposes)Browser.Visible = True' Navigate to the specified URLBrowser.Navigate URL' Wait until the web page fully loadsDo While Browser.Busy Or Browser.readyState <> 4DoEventsLoop' Get the HTML content of the pageResult = Browser.document.body.innerHTML' Print the HTML content to the Immediate WindowDebug.Print Result' Close Internet ExplorerBrowser.Quit' Clean upSet Browser = NothingEnd Sub

Run the script by clicking the green arrow icon above the script window or using the keyboard shortcut F5.

After a brief delay, you should see the HTML content printed in your Immediate window. If you don’t see it, ensure your Immediate window is opened by going to View → Immediate Window or using the keyboard shortcut Ctrl + G.

That’s all you need to get the HTML of any website. You only need to change the URL and rerun the script, and it’ll return results from a different target. However, you might notice that the data you’re getting is rather messy and complicated to read while not interacting with your Excel spreadsheet at all. In the next section, you’ll learn how to perform a more advanced task that only gets the data you need and prepares it for use immediately.

Scrape data using a VBA script and save it to Excel

To perform a more precise scraping task, you’ll need to examine the web page's HTML to find the content you need. In this example, we’ll return to the Books to Scrape website and get all the book titles on the first page.

Upon inspecting the page, you’ll see the book titles as title attributes inside the <h3> tags under the <article> elements with the class product_pod. Knowing that, you can write a VBA script to target the specific elements and print them in the Excel spreadsheet.

Here’s the extended and modified code. Refer to the comments inside the code to learn what each section does.

Sub ScrapeToExcel()' Declare variablesDim Browser As ObjectDim URL As StringDim HTMLContent As StringDim doc As ObjectDim article As ObjectDim product As ObjectDim h3 As ObjectDim link As ObjectDim scrapedData As StringDim rowNum As Integer' Set the URL of the website to scrapeURL = "https://books.toscrape.com/index.html"' Create a new instance of Internet ExplorerSet Browser = CreateObject("InternetExplorer.Application")' Make the browser visibleBrowser.Visible = True' Navigate to the specified URLBrowser.Navigate URL' Wait until the web page is fully loadedDo While Browser.Busy Or Browser.readyState <> 4DoEventsLoop' Get the HTML content of the pageHTMLContent = Browser.document.body.innerHTML' Load the HTML content into an HTMLDocument objectSet doc = CreateObject("htmlfile")doc.body.innerHTML = HTMLContent' Find all <article> elements with the class "product_pod"Set article = doc.getElementsByClassName("product_pod")' Initialize the row number for the Excel spreadsheetrowNum = 1' Loop through each <article> elementFor Each product In article' Find the <h3> element within the <article>Set h3 = product.getElementsByTagName("h3")(0)' Find the <a> element within the <h3>Set link = h3.getElementsByTagName("a")(0)' Extract the text content of the <a> elementscrapedData = link.Title' Output the scraped data to the Excel spreadsheetSheet1.Cells(rowNum, 1).Value = scrapedData' Move to the next rowrowNum = rowNum + 1Next product' Close Internet ExplorerBrowser.Quit' Clean upSet Browser = NothingSet doc = NothingSet article = NothingSet product = NothingSet h3 = NothingSet link = Nothing' Notify the user that the data has been successfully exportedMsgBox "Data has been exported to Excel.", vbInformationEnd Sub

The script launches a browser, navigates to the specified web page, and waits for it to load. It then loads the entire HTML of the site into an HTMLDocument object, from which it can find the <article> elements based on the class name. Next, it looks specifically inside them to find the titles under <h3> inside <a> tags as title attributes. Finally, it prints each title that it finds into an Excel spreadsheet.

Output

The final output is visible inside the spreadsheet. You can continue to use this data as you usually would with Excel for analysis, filtering, and other purposes. You can extract more valuable data by modifying the script, setting up scheduled scraping tasks, automating, and more.

Proxy setup

Using proxies is crucial in web scraping to avoid IP bans and rate limitations and ensure anonymity, allowing for uninterrupted data extraction from websites. Smartproxy offers various proxy types, such are residential proxies or rotating proxies, for your scraping needs that can be used while scraping with Microsoft Excel. To set up proxies on Windows, follow these steps:

- Open the Settings (Win+I).

- Click on Network & Internet.

- Navigate to the Proxy section.

- Scroll down to the Manual proxy setup section.

- Enable the Use a proxy server setting.

- Enter the Address and Port number of the proxy connection. You can get these from the dashboard.

- Click Save.

With this setup, all your HTTP requests will be made through a proxy server, regardless of application.

Conclusion

Scraping the web with Microsoft Excel presents many ways to extract data from online sources, offering the ability to leverage familiar tools like web queries and VBA. Web queries provide a straightforward method but limited option for importing data from web pages directly into Excel. At the same time, VBA offers a more customizable and versatile approach, allowing users to create tailored solutions to fit their specific needs. Web scraping with Microsoft Excel can be a valuable asset for researchers, analysts, and professionals across various industries, enabling them to harness the vast wealth of data available on the web.

Ensure that your web scraping activities are undetectable and smooth – explore our many proxy options to access websites from many different locations worldwide and remain anonymous while doing so.

About the author

Zilvinas Tamulis

Technical Copywriter

A technical writer with over 4 years of experience, Žilvinas blends his studies in Multimedia & Computer Design with practical expertise in creating user manuals, guides, and technical documentation. His work includes developing web projects used by hundreds daily, drawing from hands-on experience with JavaScript, PHP, and Python.

Connect with Žilvinas via LinkedIn

All information on Smartproxy Blog is provided on an as is basis and for informational purposes only. We make no representation and disclaim all liability with respect to your use of any information contained on Smartproxy Blog or any third-party websites that may belinked therein.