
Python Tutorial – Scraping Google Featured Snippet [VIDEO]

What do you usually do when a specific question or product pops into your mind, and you need a quick answer? You probably type it on Google and select one of the top results.

From a business perspective, you probably want to know how Google's algorithms picked those top-ranking pages, since being one of them attracts more traffic. The result pages of the largest search engine in the world are an excellent source for competitor and market research. Every good decision is based on good research, right?

Featured snippets magic

If you have decided to base your research on SERPs, you should know what kind of data you want to gather. Results pages present various types of content, from the common paid and organic results to videos, related questions, and featured snippets. And this list is far from complete.

In this blog post, we will focus on featured snippets and the level of their coolness.

Why should you care about featured snippets?

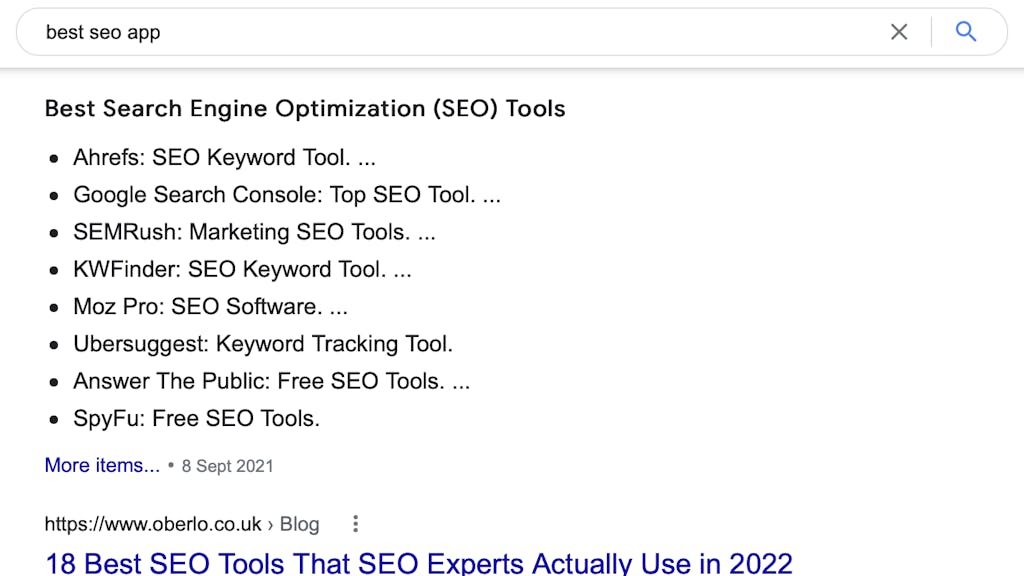

Google featured snippets are summarized pieces of information shown right below the search ads in response to a search query. Since we are used to skimming through the results quickly and clicking the top ones, featured snippets increase the traffic to the page they come from. These mighty pieces of content give people a shorter version of the answer, and if it is satisfying enough, users tend to click the search result to learn more about the topic.

Types of Google featured snippets

Featured snippets have no single fixed form. Many variations are floating around, but we picked the most popular ones.

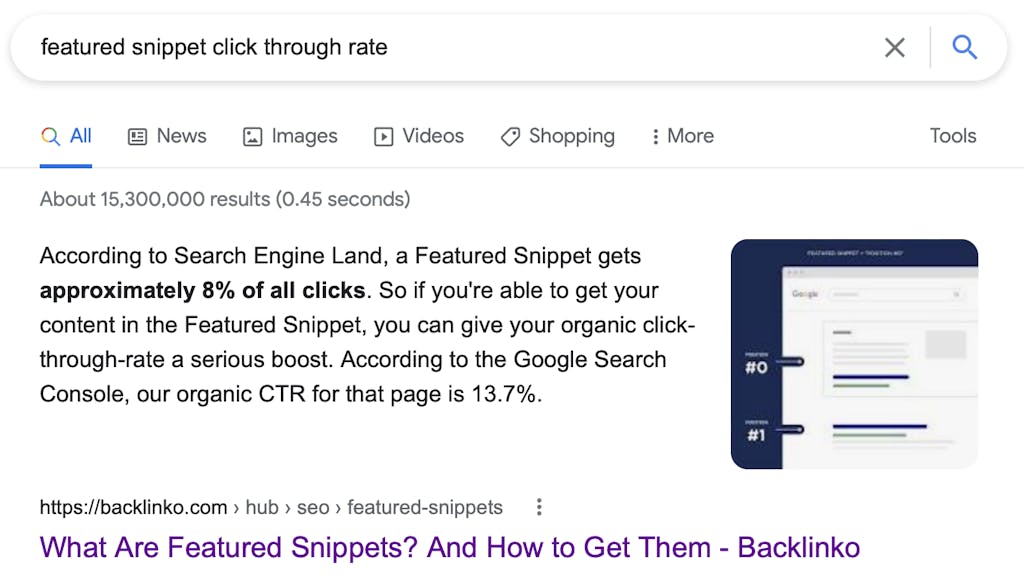

#1 Paragraph featured snippet

80% of Google featured snippets show up in the form of a short paragraph. They are created by picking the part of a page's content that directly answers the query. The paragraph immediately answers the main question and sparks the reader's interest to learn more, since they already know there is a good chance of finding the needed information on that page.

Pssst, a quick tip: FAQ sections and blog pages can make the fight for featured snippets a little less harsh.

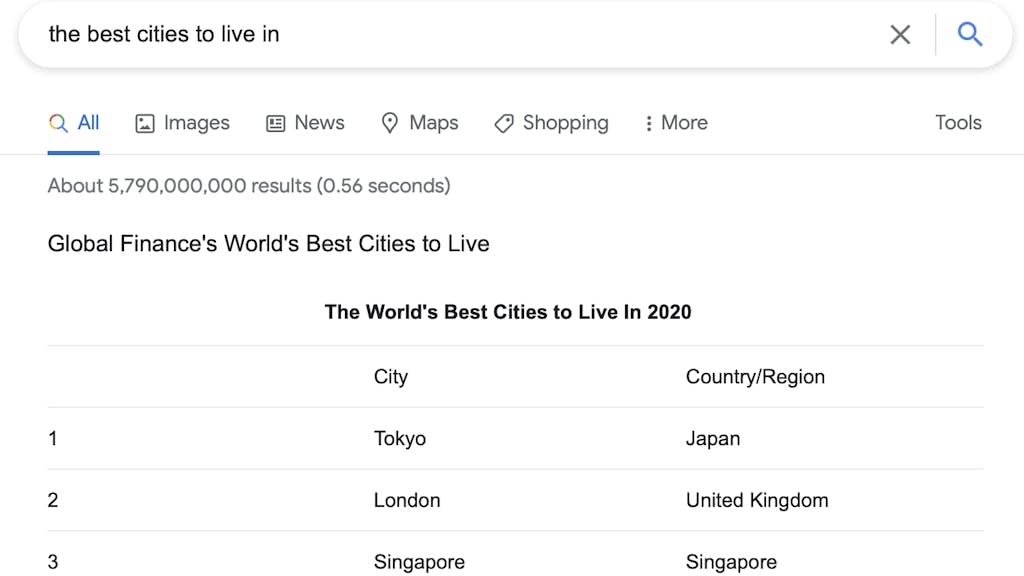

#2 The table

Such a snippet can spare you a headache if you are searching for a quick, systematic comparison. The table is not necessarily shown as it appears in the original content; Google may adapt it to better answer the query. So, if you want to win a particular query by earning a featured snippet, the content matters more than the design. If you have valuable lists, pricing, rates, or other data to share, your chances of earning a table featured snippet increase considerably.

#3 The list

Featured snippets shaped as lists can provide ordered or unordered information, so there is plenty of space for your imagination on how to build such informational lists. You can use simple listicle articles, recipes, how-to tutorials, and feature lists.

Why are featured snippets such a big deal?

Featured snippets usually outrank other results, automatically attracting more traffic and increasing click-through rate. Consequently, it could be a razor-sharp marketing move if your generated content can be "promoted" to the top places of search result pages. Featured snippets could be your answer on how to win those first positions.

You can find a lot of tips on how to improve your SEO game out there. Searching for relevant keywords with low difficulty, creating valuable content, and structuring it based on your target audience's searching habits is essential. But where exactly should you start?

There are a lot of hacks floating around about how to optimize your page for featured snippets, but it is not always clear which tactic to apply first. It is always good to keep an eye on your competitors, so you can start with competitor or overall market research.

Scraping for market research

Knowing how powerful and sophisticated Google is, it would be naive to expect that scraping its results at scale is as easy as pie. An automated scraping solution is a smart move. However, if you make too many requests from one IP address, you’ll quickly get blocked before reaching your goal.

We have good news for you – Smartproxy rocks premium-quality SEO proxies together with a full-stack scraping tool. Our SERP Scraping API includes a proxy network, web scraper, and a data parser. The full-stack solution helps you collect any desired information from SERPs, including Google. With this tool, you can run the research smoothly, since we have picked the best residential, mobile, and datacenter proxies for you. Keep reading as this Python tutorial will help you try out our SERP Scraping API right away.

By the way, we have a bunch of Python tutorials about scraping, including how to scrape Google SERP, so check them out if you want to start with something simpler. If Python is not your thing, we prepared a detailed tutorial on how to scrape with cURL and Terminal, so you can choose which scraping technique suits you better.

Step-by-step guide for scraping Google featured snippets with Python

For this tutorial, we will use our SERP Scraping API’s real-time integration. Don’t worry, it's beginner-friendly! If you need code examples for your query, you can check them on our real-time integration page. This tutorial is based on Python, but you can also find code examples for Node and PHP if you want to try other programming languages.

Let’s jump straight to the code:

Step 1: Prepare to use SERP Scraping API

Import the requests library to send the queries and pprint to get nicer output of the query results.

import requests

from pprint import pprint

To start using our SERP Scraping API, add your credentials.

username = 'SPusername'

password = 'SPpassword'
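If you would rather not keep credentials in the script itself, one option is to read them from environment variables. The sketch below is only an illustration: the function name load_credentials and the variable names SMARTPROXY_USERNAME and SMARTPROXY_PASSWORD are assumptions made for this example, not names the API requires.

```python
import os


def load_credentials(env=None):
    """Return (username, password) read from environment variables.

    SMARTPROXY_USERNAME and SMARTPROXY_PASSWORD are hypothetical names
    chosen for this sketch; pick whatever fits your setup.
    """
    env = os.environ if env is None else env
    try:
        return env["SMARTPROXY_USERNAME"], env["SMARTPROXY_PASSWORD"]
    except KeyError as missing:
        # Fail early with a readable message instead of sending empty credentials.
        raise RuntimeError(f"Missing environment variable: {missing}") from None
```

With the variables set in your shell, `username, password = load_credentials()` can replace the two hard-coded lines above.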

Step 2: Set up queries

After completing the primary steps, create a list of queries you want to scrape from Google. For this example, we used five different search queries that could provide featured snippets as the results.

queries = ['best seo app',

'best residential proxies',

'pizza near me',

'dental clinic',

'best chicken wings in the area']

Add the necessary header as indicated below. After this, do not forget to initialize a featured_snippets dictionary as a place where the gathered data is going to be stored.

headers = {

    'Content-Type': 'application/json'

}

featured_snippets = {}

For now, your code should look like this:

import requests

from pprint import pprint

username = 'username'

password = 'password'

queries = ['best seo app',

           'best residential proxies',

           'pizza near me',

           'dental clinic',

           'best chicken wings in the area']

headers = {

    'Content-Type': 'application/json'

}

featured_snippets = {}

Step 3: Build the query request

When the setup part is done, to get the desired results, we will need to perform a POST request to the SERP Scraping API and repeat this action for all the chosen queries.

We choose a POST request as this method allows us to send the data to the server and scrape according to the pre-set parameters posted in the request.

response = requests.post(

'https://scrape.smartproxy.com/v1/tasks',

As we have already written our header parameter in the previous step, we can leave the following code part like this:

headers = headers,

json = {

Since our primary target is Google featured snippets, we identify the target as Google search (google_search) and the domain as .com for the same reason.

'target': 'google_search',

'domain': 'com',

For more specific research, you can specify the location. By the way, location is not the only parameter you can use to filter your results. Learn more about it in our documentation.

Since we are running the request for each search term from the list above, pass the variable query, which holds the current search term, as the “query” parameter. If you would like to receive the results neatly parsed instead of raw HTML, set the parse parameter to True.

'geo': 'London,England,United Kingdom',

'query': query,

'parse': True,

},

Lastly, authenticate with the username and password you set in Step 1 to run the query.

auth = (username, password)

The whole code for step 3 looks like this:

response = requests.post(

    'https://scrape.smartproxy.com/v1/tasks',

    headers=headers,

    json={

        'target': 'google_search',

        'domain': 'com',

        'geo': 'London,England,United Kingdom',

        'query': query,

        'parse': True,

    },

    auth=(username, password)

)
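If you plan to run the same request with and without a location, it can help to build the JSON body in a small function. This is a minimal sketch: build_payload is a hypothetical helper name, while the keys (target, domain, geo, query, parse) are the ones used in the request above.

```python
def build_payload(query, geo=None, domain="com", parse=True):
    """Build the JSON body for one Google search request.

    The keys mirror the request shown in this step; the function itself
    is an illustrative helper, not part of the API.
    """
    payload = {
        "target": "google_search",
        "domain": domain,
        "query": query,
        "parse": parse,
    }
    # Only send a location when one was requested.
    if geo is not None:
        payload["geo"] = geo
    return payload
```

The result can then be passed as the json argument of requests.post, for example `json=build_payload(query, geo='London,England,United Kingdom')`.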

Step 4: Filtering featured snippets results

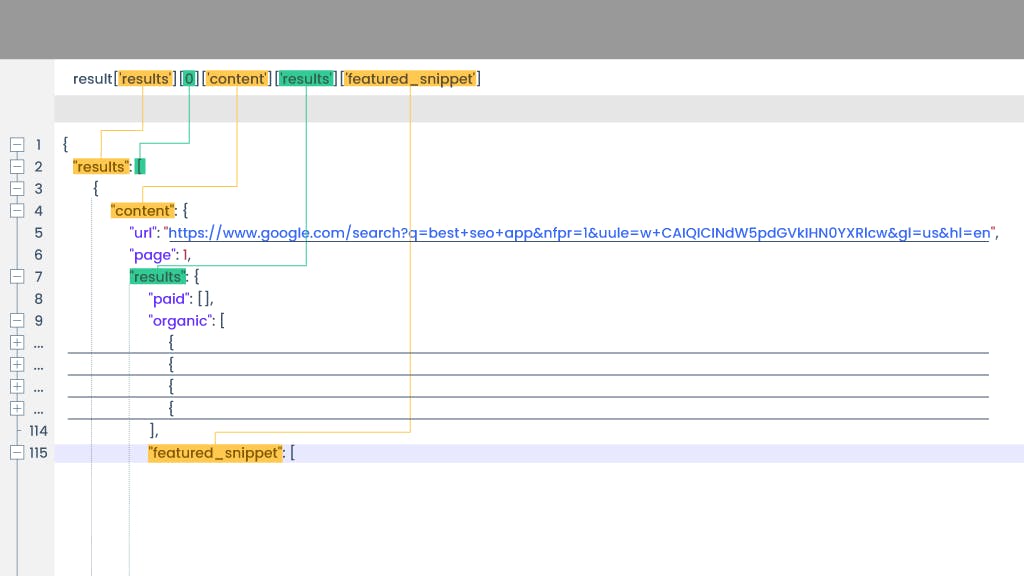

The raw results are not enough if we seek to gather featured snippets in particular. Since the scraper returns all the results, we need to set a condition to find featured snippets among them.

First, parse the JSON response and assign it to the "result" variable:

result = response.json()

Then, check if you got a featured snippet in the result:

if 'featured_snippet' in result['results'][0]['content']['results']: featured_snippets[query] = (result['results'][0]['content']['results']['featured_snippet'])
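The lookup above assumes the response always contains at least one result with parsed content. A more defensive version could look like the sketch below. The function name extract_featured_snippet is made up for this example, and the response shape is taken only from the lookup path used in this tutorial.

```python
def extract_featured_snippet(result):
    """Return the featured snippet data from a parsed response, or None.

    Follows the path result['results'][0]['content']['results'] used in
    this tutorial, but tolerates missing or unexpected pieces.
    """
    results = result.get("results") or []
    if not results:
        return None
    content = results[0].get("content")
    # With parsing turned off, content may be raw HTML rather than a dict.
    if not isinstance(content, dict):
        return None
    parsed = content.get("results") or {}
    return parsed.get("featured_snippet")
```

Inside the loop you could then write `snippet = extract_featured_snippet(result)` and store it in featured_snippets only when it is not None.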

To get more eye-friendly formatting, print the dictionary with the pprint function imported in Step 1:

pprint(featured_snippets)

Voila! If you have done everything correctly, you should end up with a list of featured snippets you have been searching for.

C:\serps>python featured_snippets.py

{'best residential proxies': [{'desc': 'Top 9 Residential Proxy Providers\n'

'OxyLabs. ... \n'

'GeoSurf. ... \n'

'Bright Data. ... \n'

'Smartproxy. ... \n'

'NetNut. ... \n'

'StormProxies. ... \n'

'RSocks. ... \n'

'Shifter. Shifter, which claims to have'

'the largest pool of peer-to-peer'

'connections on the Internet, with 31'

'million IP addresses, has won the'

'Internet vote of confidence for many'

'users.\n'

'More items... \n'

'•\n'

'Jul 8, 2021',

'pos_overall': 5,

'title': 'The Top 9 Residential Proxy Service'

'Providers',

'url': 'https://www.webscrapingapi.com/top-residential-proxy-providers/',

'url_shown': 'https://www.webscrapingapi.com> '

'top-residential-prox...'}],

'best seo app': [{'desc': 'Best Search Engine Optimization (SEO) Tools\n'

'Ahrefs: SEO Keyword Tool. ... \n'

'Google Search Console: Top SEO Tool. ... \n'

'SEMRush: Marketing SEO Tools. ... \n'

'KWFinder: SEO Keyword Tool. ... \n'

'Moz Pro: SEO Software. ... \n'

'Ubersuggest: Keyword Tracking Tool.\n'

'Answer the Public: Free SEO Tools. ... \n'

'SpyFu: Free SEO Tools.\n'

'More items... \n'

'•\n'

'Sep 8, 2021',

'pos_overall': 1,

'title': '18 Best SEO Tools That SEO Experts Actually Use'

'in 2022',

'url': 'https://www.oberlo.com/blog/seo-tools',

'url_shown': 'https://www.oberlo.com> Blog'}]}

C:\serps>
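For research purposes, you may prefer a table over a nested dictionary. The sketch below flattens the featured_snippets dictionary into CSV text. The function name snippets_to_csv is hypothetical, and the field names (title, url, pos_overall) are taken from the sample output above.

```python
import csv
import io


def snippets_to_csv(featured_snippets):
    """Flatten {query: [snippet, ...]} into CSV text, one row per snippet."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["query", "title", "url", "pos_overall"])
    for query, snippets in featured_snippets.items():
        for snippet in snippets:
            writer.writerow([
                query,
                snippet.get("title", ""),
                snippet.get("url", ""),
                snippet.get("pos_overall", ""),
            ])
    return buffer.getvalue()
```

Writing the returned text to a file gives you something you can open in a spreadsheet and compare across queries.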

The entire code:

import requests

from pprint import pprint

username = 'username'

password = 'password'

queries = ['best seo app',

           'best residential proxies',

           'pizza near me',

           'dental clinic',

           'best chicken wings in the area']

headers = {

    'Content-Type': 'application/json'

}

featured_snippets = {}

# Send one request per query and keep only the featured snippets.

for query in queries:

    response = requests.post(

        'https://scrape.smartproxy.com/v1/tasks',

        headers=headers,

        json={

            'target': 'google_search',

            'domain': 'com',

            'query': query,

            'parse': True,

        },

        auth=(username, password)

    )

    result = response.json()

    if 'featured_snippet' in result['results'][0]['content']['results']:

        featured_snippets[query] = (result['results'][0]['content']['results']['featured_snippet'])

# Print everything that was collected once all queries are done.

pprint(featured_snippets)
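The script above assumes every request succeeds on the first try. If you want it to survive a temporary network hiccup, you could wrap each call in a small retry helper. This is a generic sketch, not something the API requires: call_with_retries and its parameters are names invented for this example.

```python
import time


def call_with_retries(send, attempts=3, delay=1.0, retry_on=(Exception,)):
    """Call send() until it succeeds or the attempts run out.

    send is any zero-argument callable, for example a lambda wrapping
    requests.post(...). In real code, narrow retry_on to the errors you
    actually expect, such as requests.RequestException.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return send()
        except retry_on as error:
            last_error = error
            if attempt < attempts:
                # Wait a little longer after each failed attempt.
                time.sleep(delay * attempt)
    raise last_error
```

In the loop, the request could then be written as `response = call_with_retries(lambda: requests.post(...))`, keeping the same arguments as before.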

Yay, you're done! Check our video with another great Python tutorial if you need more information on scraping the mighty Google with our SERP Scraping API.


Wrapping up

Great, now you have fresh material for your research. Don’t forget that you can scrape a bunch of cool stuff with the SERP Scraping API – top stories, popular products, real-time ads, and more.

By the way, you can always explore our API documentation for great examples. And if you have any specific questions, our round-the-clock support heroes are more than ready to help you fix everything.

James Keenan

Senior content writer

The automation and anonymity evangelist at Smartproxy. He believes in data freedom and everyone’s right to become a self-starter. James is here to share knowledge and help you succeed with residential proxies.

Frequently asked questions

Is it legal to scrape Google?

Yes, the data presented on Google SERPs is publicly available; therefore, there is no crime in scraping it. As far as is known, Google has not taken any legal action to prevent scraping. However, the largest search engine has its own methods to prevent scraping at scale from a single IP address, so proxy solutions are a must if you want to do it professionally. If you want to avoid a bunch of blocks, use a full-stack solution like Smartproxy’s SERP Scraping API, which ensures a 100% success rate.

What proxies are the best to scrape Google?

The short answer is: those that ensure the delivery of the most successful results. Datacenter proxies are fast but more vulnerable to cloaking, hence residential proxies could be the better option. Just keep in mind that proxies alone are not enough to perform scraping tasks. If you are unwilling to build your own scraper, try our SERP Scraping API, and you'll pay only for successful results.

How does SERP Scraping API differ from search engine proxies?

Our all-inclusive scraping tool is more than just a pool of proxies! It's a full-stack solution: a network of 65M+ residential, mobile, and datacenter proxies together with a web scraper and data parser. It’s not only an easier but also a cheaper way to gather the required data, and it spares you the headache of all those extra tools.
